The brain operates efficiently despite its comparatively shallow structure of only a few layers, whereas modern AI systems rely on deep architectures with many layers. This raises two questions: can brain-inspired shallow architectures rival the performance of deep ones, and if so, what fundamental mechanisms make this possible?

Neural network learning methods are inspired by the brain’s functioning, yet there are fundamental differences between how the brain learns and how deep learning operates. A key distinction lies in the number of layers each employs.

Deep learning systems often have many layers, sometimes extending into the hundreds, which allows them to effectively learn complex classification tasks. In contrast, the human brain has a much simpler structure with far fewer layers. Despite its relatively shallow architecture and the slower, noisier nature of its processes, the brain is remarkably adept at handling complex classification tasks efficiently.

Research on Shallow Learning Mechanisms in the Brain

New research asks what mechanism might underlie the brain's efficient shallow learning, allowing it to perform classification tasks with the same accuracy as deep learning. In an article published in Physica A, researchers from Bar-Ilan University in Israel show how such shallow learning mechanisms can compete with deep learning.

Credit: Prof. Ido Kanter, Bar-Ilan University

“Instead of a deep architecture, like a skyscraper, the brain consists of a wide shallow architecture, more like a very wide building with only very few floors,” said Prof. Ido Kanter, of Bar-Ilan’s Department of Physics and Gonda (Goldschmied) Multidisciplinary Brain Research Center, who led the research.

“The capability to correctly classify objects increases where the architecture becomes deeper, with more layers. In contrast, the brain’s shallow mechanism indicates that a wider network better classifies objects,” said Ronit Gross, an undergraduate student and one of the key contributors to this work.

“Wider and higher architectures represent two complementary mechanisms,” she added. Nevertheless, realizing very wide shallow architectures that imitate the brain's dynamics would require a shift in the properties of advanced GPU technology, which accelerates deep architectures well but is poorly suited to implementing wide shallow ones.
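To make the "skyscraper versus wide building" contrast concrete, the short Python sketch below describes two fully connected networks purely by their layer widths and reports their depth, widest hidden layer, and parameter count. It is an illustration under stated assumptions, not the researchers' method: the class name `MLPShape` and all layer sizes (784 inputs, 10 output classes, one 4096-unit hidden layer versus eight 256-unit hidden layers) are hypothetical choices, and the code does not train anything or reproduce the study's results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MLPShape:
    """Hypothetical description of a fully connected network by its layer widths.

    widths[0] is the input size, widths[-1] the number of output classes,
    and everything in between is a hidden layer.
    """
    widths: tuple[int, ...]

    @property
    def depth(self) -> int:
        # Number of weight layers: one fewer than the number of width entries.
        return len(self.widths) - 1

    @property
    def max_width(self) -> int:
        # Widest hidden layer (input and output sizes are excluded).
        return max(self.widths[1:-1])

    @property
    def num_params(self) -> int:
        # Each dense layer mapping a -> b has a*b weights plus b biases.
        return sum(a * b + b for a, b in zip(self.widths, self.widths[1:]))


# "Wide building": a single, very wide hidden layer (sizes are illustrative only).
wide = MLPShape((784, 4096, 10))

# "Skyscraper": many narrower hidden layers stacked on top of each other.
deep = MLPShape((784,) + (256,) * 8 + (10,))

for name, net in (("wide shallow", wide), ("deep narrow", deep)):
    print(f"{name}: depth={net.depth}, max width={net.max_width}, params={net.num_params:,}")
```

With these assumed sizes, the wide network has only 2 weight layers but 3,256,330 parameters, while the deep one has 9 weight layers and 664,074 parameters. Width and depth are independent knobs, which is the sense in which the two can act as complementary mechanisms; which one classifies better is the empirical question the study addresses.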

Reference: “Efficient shallow learning mechanism as an alternative to deep learning” by Ofek Tevet, Ronit D. Gross, Shiri Hodassman, Tal Rogachevsky, Yarden Tzach, Yuval Meir and Ido Kanter, 11 January 2024, Physica A: Statistical Mechanics and its Applications.

DOI: 10.1016/j.physa.2024.129513

News

3D-printed implant offers a potential new route to repair spinal cord injuries

A research team at RCSI University of Medicine and Health Sciences has developed a 3-D printed implant to deliver electrical stimulation to injured areas of the spinal cord, offering a potential new route to [...]

Nanocrystals Carrying Radioisotopes Offer New Hope for Cancer Treatment

The Science Scientists have developed tiny nanocrystal particles made up of isotopes of the elements lanthanum, vanadium, and oxygen for use in treating cancer. These crystals are smaller than many microbes and can carry isotopes of [...]

New Once-a-Week Shot Promises Life-Changing Relief for Parkinson’s Patients

A once-a-week shot from Australian scientists could spare people with Parkinson’s the grind of taking pills several times a day. The tiny, biodegradable gel sits under the skin and releases steady doses of two [...]

Weekly injectable drug offers hope for Parkinson’s patients

A new weekly injectable drug could transform the lives of more than eight million people living with Parkinson's disease, potentially replacing the need for multiple daily tablets. Scientists from the University of South Australia [...]

Most Plastic in the Ocean Is Invisible—And Deadly

Nanoplastics—particles smaller than a human hair—can pass through cell walls and enter the food web. New research suggest 27 million metric tons of nanoplastics are spread across just the top layer of the North [...]

Repurposed drugs could calm the immune system’s response to nanomedicine

An international study led by researchers at the University of Colorado Anschutz Medical Campus has identified a promising strategy to enhance the safety of nanomedicines, advanced therapies often used in cancer and vaccine treatments, [...]

Nano-Enhanced Hydrogel Strategies for Cartilage Repair

A recent article in Engineering describes the development of a protein-based nanocomposite hydrogel designed to deliver two therapeutic agents—dexamethasone (Dex) and kartogenin (KGN)—to support cartilage repair. The hydrogel is engineered to modulate immune responses and promote [...]

New Cancer Drug Blocks Tumors Without Debilitating Side Effects

A new drug targets RAS-PI3Kα pathways without harmful side effects. It was developed using high-performance computing and AI. A new cancer drug candidate, developed through a collaboration between Lawrence Livermore National Laboratory (LLNL), BridgeBio Oncology [...]

Scientists Are Pretty Close to Replicating the First Thing That Ever Lived

For 400 million years, a leading hypothesis claims, Earth was an “RNA World,” meaning that life must’ve first replicated from RNA before the arrival of proteins and DNA. Unfortunately, scientists have failed to find [...]

Why ‘Peniaphobia’ Is Exploding Among Young People (And Why We Should Be Concerned)

An insidious illness is taking hold among a growing proportion of young people. Little known to the general public, peniaphobia—the fear of becoming poor—is gaining ground among teens and young adults. Discover the causes [...]

Team finds flawed data in recent study relevant to coronavirus antiviral development

The COVID pandemic illustrated how urgently we need antiviral medications capable of treating coronavirus infections. To aid this effort, researchers quickly homed in on part of SARS-CoV-2's molecular structure known as the NiRAN domain—an [...]

Drug-Coated Neural Implants Reduce Immune Rejection

Summary: A new study shows that coating neural prosthetic implants with the anti-inflammatory drug dexamethasone helps reduce the body’s immune response and scar tissue formation. This strategy enhances the long-term performance and stability of electrodes [...]

Scientists discover cancer-fighting bacteria that ‘soak up’ forever chemicals in the body

A family of healthy bacteria may help 'soak up' toxic forever chemicals in the body, warding off their cancerous effects. Forever chemicals, also known as PFAS (per- and polyfluoroalkyl substances), are toxic chemicals that [...]

Johns Hopkins Researchers Uncover a New Way To Kill Cancer Cells

A new study reveals that blocking ribosomal RNA production rewires cancer cell behavior and could help treat genetically unstable tumors. Researchers at the Johns Hopkins Kimmel Cancer Center and the Department of Radiation Oncology and Molecular [...]

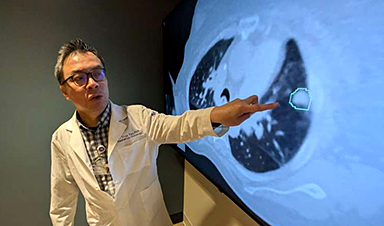

AI matches doctors in mapping lung tumors for radiation therapy

In radiation therapy, precision can save lives. Oncologists must carefully map the size and location of a tumor before delivering high-dose radiation to destroy cancer cells while sparing healthy tissue. But this process, called [...]

Scientists Finally “See” Key Protein That Controls Inflammation

Researchers used advanced microscopy to uncover important protein structures. For the first time, two important protein structures in the human body are being visualized, thanks in part to cutting-edge technology at the University of [...]