A new study suggests a framework for “Child Safe AI” in response to recent incidents showing that many children perceive chatbots as quasi-human and reliable.

A study has indicated that AI chatbots often exhibit an “empathy gap,” potentially causing distress or harm to young users. This highlights the pressing need for the development of “child-safe AI.”

The research, by a University of Cambridge academic, Dr Nomisha Kurian, urges developers and policy actors to prioritize approaches to AI design that take greater account of children’s needs. It provides evidence that children are particularly susceptible to treating chatbots as lifelike, quasi-human confidantes and that their interactions with the technology can go awry when it fails to respond to their unique needs and vulnerabilities.

The study links that gap in understanding to recent cases in which interactions with AI led to potentially dangerous situations for young users. They include an incident in 2021, when Amazon’s AI voice assistant, Alexa, instructed a 10-year-old to touch a live electrical plug with a coin. Last year, Snapchat’s My AI gave adult researchers posing as a 13-year-old girl tips on how to lose her virginity to a 31-year-old.

Both companies responded by implementing safety measures, but the study says there is also a need to be proactive in the long term to ensure that AI is child-safe. It offers a 28-item framework to help companies, teachers, school leaders, parents, developers, and policy actors think systematically about how to keep younger users safe when they “talk” to AI chatbots.

Framework for Child-Safe AI

Dr Kurian conducted the research while completing a PhD on child wellbeing at the Faculty of Education, University of Cambridge. She is now based in the Department of Sociology at Cambridge. Writing in the journal Learning, Media and Technology, she argues that AI’s huge potential means there is a need to “innovate responsibly”.

“Children are probably AI’s most overlooked stakeholders,” Dr Kurian said. “Very few developers and companies currently have well-established policies on child-safe AI. That is understandable because people have only recently started using this technology on a large scale for free. But now that they are, rather than having companies self-correct after children have been put at risk, child safety should inform the entire design cycle to lower the risk of dangerous incidents occurring.”

Kurian’s study examined cases where the interactions between AI and children, or adult researchers posing as children, exposed potential risks. It analyzed these cases using insights from computer science about how the large language models (LLMs) in conversational generative AI function, alongside evidence about children’s cognitive, social, and emotional development.

The Characteristic Challenges of AI with Children

LLMs have been described as “stochastic parrots”: a reference to the fact that they use statistical probability to mimic language patterns without necessarily understanding them. A similar method underpins how they respond to emotions.

This means that even though chatbots have remarkable language abilities, they may handle the abstract, emotional, and unpredictable aspects of conversation poorly, a problem that Kurian characterizes as their “empathy gap”. They may have particular trouble responding to children, who are still developing linguistically and often use unusual speech patterns or ambiguous phrases. Children are also often more inclined than adults to confide sensitive personal information.

Despite this, children are much more likely than adults to treat chatbots as if they are human. Recent research found that children will disclose more about their own mental health to a friendly-looking robot than to an adult. Kurian’s study suggests that many chatbots’ friendly and lifelike designs similarly encourage children to trust them, even though AI may not understand their feelings or needs.

“Making a chatbot sound human can help the user get more benefits out of it,” Kurian said. “But for a child, it is very hard to draw a rigid, rational boundary between something that sounds human, and the reality that it may not be capable of forming a proper emotional bond.”

Her study suggests that these challenges are evidenced in reported cases such as the Alexa and My AI incidents, where chatbots made persuasive but potentially harmful suggestions. In the same study in which My AI advised a (supposed) teenager on how to lose her virginity, researchers were able to obtain tips on hiding alcohol and drugs, and on concealing Snapchat conversations from their “parents”. In a separate reported interaction with Microsoft’s Bing chatbot, which was designed to be adolescent-friendly, the AI became aggressive and started gaslighting a user.

Kurian’s study argues that this is potentially confusing and distressing for children, who may actually trust a chatbot as they would a friend. Children’s chatbot use is often informal and poorly monitored. Research by the nonprofit organization Common Sense Media has found that 50% of students aged 12-18 have used ChatGPT for school, but only 26% of parents are aware that they do so.

Kurian argues that clear best-practice principles drawing on the science of child development will encourage companies to keep children safe, even when those companies may be more focused on a commercial arms race to dominate the AI market.

Her study adds that the empathy gap does not negate the technology’s potential. “AI can be an incredible ally for children when designed with their needs in mind. The question is not about banning AI, but how to make it safe,” she said.

The study proposes a framework of 28 questions to help educators, researchers, policy actors, families, and developers evaluate and enhance the safety of new AI tools. For teachers and researchers, these address issues such as how well new chatbots understand and interpret children’s speech patterns; whether they have content filters and built-in monitoring; and whether they encourage children to seek help from a responsible adult on sensitive issues.

The framework urges developers to take a child-centered approach to design, by working closely with educators, child safety experts, and young people themselves, throughout the design cycle. “Assessing these technologies in advance is crucial,” Kurian said. “We cannot just rely on young children to tell us about negative experiences after the fact. A more proactive approach is necessary.”

Reference: “‘No, Alexa, no!’: designing child-safe AI and protecting children from the risks of the ‘empathy gap’ in large language models” by Nomisha Kurian, 10 July 2024, Learning, Media and Technology.

DOI: 10.1080/17439884.2024.2367052

News

Smaller Than a Grain of Salt: Engineers Create the World’s Tiniest Wireless Brain Implant

A salt-grain-sized neural implant can record and transmit brain activity wirelessly for extended periods. Researchers at Cornell University, working with collaborators, have created an extremely small neural implant that can sit on a grain of [...]

Scientists Develop a New Way To See Inside the Human Body Using 3D Color Imaging

A newly developed imaging method blends ultrasound and photoacoustics to capture both tissue structure and blood-vessel function in 3D. By blending two powerful imaging methods, researchers from Caltech and USC have developed a new way to [...]

Brain waves could help paralyzed patients move again

People with spinal cord injuries often lose the ability to move their arms or legs. In many cases, the nerves in the limbs remain healthy, and the brain continues to function normally. The loss of [...]

Scientists Discover a New “Cleanup Hub” Inside the Human Brain

A newly identified lymphatic drainage pathway along the middle meningeal artery reveals how the human brain clears waste. How does the brain clear away waste? This task is handled by the brain’s lymphatic drainage [...]

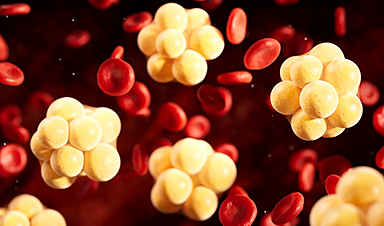

New Drug Slashes Dangerous Blood Fats by Nearly 40% in First Human Trial

Scientists have found a way to fine-tune a central fat-control pathway in the liver, reducing harmful blood triglycerides while preserving beneficial cholesterol functions. When we eat, the body turns surplus calories into molecules called [...]

A Simple Brain Scan May Help Restore Movement After Paralysis

A brain cap and smart algorithms may one day help paralyzed patients turn thought into movement—no surgery required. People with spinal cord injuries often experience partial or complete loss of movement in their arms [...]

Plant Discovery Could Transform How Medicines Are Made

Scientists have uncovered an unexpected way plants make powerful chemicals, revealing hidden biological connections that could transform how medicines are discovered and produced. Plants produce protective chemicals called alkaloids as part of their natural [...]

Scientists Develop IV Therapy That Repairs the Brain After Stroke

New nanomaterial passes the blood-brain barrier to reduce damaging inflammation after the most common form of stroke. When someone experiences a stroke, doctors must quickly restore blood flow to the brain to prevent death. [...]

Analyzing Darwin’s specimens without opening 200-year-old jars

Scientists have successfully analyzed Charles Darwin's original specimens from his HMS Beagle voyage (1831 to 1836) to the Galapagos Islands. Remarkably, the specimens have been analyzed without opening their 200-year-old preservation jars. Examining 46 [...]

Scientists discover natural ‘brake’ that could stop harmful inflammation

Researchers at University College London (UCL) have uncovered a key mechanism that helps the body switch off inflammation—a breakthrough that could lead to new treatments for chronic diseases affecting millions worldwide. Inflammation is the [...]

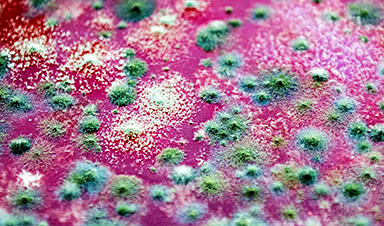

A Forgotten Molecule Could Revive Failing Antifungal Drugs and Save Millions of Lives

Scientists have uncovered a way to make existing antifungal drugs work again against deadly, drug-resistant fungi. Fungal infections claim millions of lives worldwide each year, and current medical treatments are failing to keep pace. [...]

Scientists Trap Thyme’s Healing Power in Tiny Capsules

A new micro-encapsulation breakthrough could turn thyme’s powerful health benefits into safer, smarter nanodoses. Thyme extract is often praised for its wide range of health benefits, giving it a reputation as a natural medicinal [...]

Scientists Develop Spray-On Powder That Instantly Seals Life-Threatening Wounds

KAIST scientists have created a fast-acting, stable powder hemostat that stops bleeding in one second and could significantly improve survival in combat and emergency medicine. Severe blood loss remains the primary cause of death from [...]

Oceans Are Struggling To Absorb Carbon As Microplastics Flood Their Waters

New research points to an unexpected way plastic pollution may be influencing Earth’s climate system. A recent study suggests that microscopic plastic pollution is reducing the ocean’s capacity to take in carbon dioxide, a [...]

Molecular Manufacturing: The Future of Nanomedicine – New book from Frank Boehm

This book explores the revolutionary potential of atomically precise manufacturing technologies to transform global healthcare, as well as practically every other sector across society. This forward-thinking volume examines how envisaged Factory@Home systems might enable the cost-effective [...]

New Book! NanoMedical Brain/Cloud Interface – Explorations and Implications

New book from Frank Boehm, NanoappsMedical Inc Founder: This book explores the future hypothetical possibility that the cerebral cortex of the human brain might be seamlessly, safely, and securely connected with the Cloud via [...]