Every time we ask an AI a question, it doesn't just return an answer: it also burns energy and emits carbon dioxide.

German researchers found that some "thinking" AI models, which generate long, step-by-step reasoning before answering, can emit up to 50 times more CO₂ than models that give short, direct responses. These emissions don't always lead to better answers, either.

AI Answers Come at a Hidden Environmental Cost

Whatever you ask an AI, it will generate an answer, whether or not that answer is accurate. To do so, the system relies on tokens: words or fragments of words that are converted into numerical data the model can process.

That process, along with the broader computing involved, produces carbon dioxide (CO₂) emissions. Yet most people are unaware that using AI tools comes with a significant carbon footprint. To better understand the impact, researchers in Germany measured and compared the emissions of several pre-trained large language models (LLMs) on a consistent set of questions.

"The environmental impact of questioning trained LLMs is strongly determined by their reasoning approach, with explicit reasoning processes significantly driving up energy consumption and carbon emissions," said Maximilian Dauner, a researcher at Hochschule München University of Applied Sciences and first author of the Frontiers in Communication study. "We found that reasoning-enabled models produced up to 50 times more CO₂ emissions than concise response models."

Reasoning Models Burn More Carbon, Not Always for Better Answers

The team tested 14 LLMs, ranging in size from seven to 72 billion parameters, on 1,000 standardized questions across a variety of subjects. Parameters are the internal values a model learns during training; they determine how it processes input and generates responses.

On average, models built for reasoning generated 543.5 additional "thinking" tokens per question, compared with just 37.7 tokens per question from models that give brief answers. Thinking tokens are the extra intermediate text a model generates before it settles on a final answer. More tokens always mean higher CO₂ emissions, but they don't necessarily produce better results: the extra detail can raise the environmental cost without improving the accuracy of the answer.
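To make the token comparison concrete, here is a minimal Python sketch. It assumes, as a simplification, that emissions scale linearly with the number of generated tokens; the per-token constant `GRAMS_CO2E_PER_TOKEN` is a hypothetical placeholder, not a value from the study. Only the two token averages (543.5 and 37.7) come from the research.

```python
# Average tokens per question reported in the study.
REASONING_THINKING_TOKENS = 543.5  # extra "thinking" tokens, reasoning models
CONCISE_TOKENS = 37.7              # tokens per question, concise models

# Hypothetical placeholder: grams of CO2e per generated token.
# Not a figure from the study; real values depend on hardware and grid.
GRAMS_CO2E_PER_TOKEN = 0.01


def estimated_emissions(tokens: float, grams_per_token: float = GRAMS_CO2E_PER_TOKEN) -> float:
    """Estimate grams of CO2e, assuming emissions scale linearly with tokens."""
    return tokens * grams_per_token


thinking_overhead = estimated_emissions(REASONING_THINKING_TOKENS)
concise_total = estimated_emissions(CONCISE_TOKENS)

# Under the linear assumption the per-token constant cancels out,
# so the ratio depends only on the two token counts.
ratio = thinking_overhead / concise_total
print(f"Thinking overhead is about {ratio:.1f}x a concise answer")
```

Because the per-token constant cancels, the reasoning models' extra thinking tokens alone come to roughly 14 times a concise model's entire output for any value you plug in, as long as the linear assumption holds. The study's "up to 50 times" figure is a maximum across models, not this average.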

Accuracy vs. Sustainability: A New AI Trade-Off

The most accurate model was the reasoning-enabled Cogito model with 70 billion parameters, which reached 84.9% accuracy but produced three times more CO₂ emissions than similar-sized models that generated concise answers. "Currently, we see a clear accuracy-sustainability trade-off inherent in LLM technologies," said Dauner. "None of the models that kept emissions below 500 grams of CO₂ equivalent achieved higher than 80% accuracy on answering the 1,000 questions correctly." CO₂ equivalent (CO₂e) expresses the climate impact of different greenhouse gases as the amount of CO₂ that would cause the same warming.

Subject matter also made a significant difference. Questions that required lengthy reasoning, such as those on abstract algebra or philosophy, led to up to six times higher emissions than more straightforward subjects like high school history.

How to Prompt Smarter (and Greener)

The researchers said they hope their work will help people make more informed decisions about their own AI use. "Users can significantly reduce emissions by prompting AI to generate concise answers or limiting the use of high-capacity models to tasks that genuinely require that power," Dauner pointed out.

The choice of model can also make a significant difference. For example, having DeepSeek R1 (70 billion parameters) answer 600,000 questions would create CO₂ emissions equal to a round-trip flight from London to New York, whereas Qwen 2.5 (72 billion parameters) could answer more than three times as many questions (about 1.9 million) with similar accuracy while generating the same emissions.
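The flight comparison can be turned into a per-question figure with simple division. In this sketch the flight's emissions are a hypothetical placeholder (`FLIGHT_GRAMS_CO2E`); only the question counts (600,000 and about 1.9 million) come from the study. Because both models are pinned to the same emissions total, the ratio between them does not depend on the placeholder.

```python
# Hypothetical placeholder for the emissions of one London-New York round trip,
# in grams of CO2e. Not a figure from the study.
FLIGHT_GRAMS_CO2E = 1_000_000.0

# Number of questions each model can answer for that same emissions budget.
QUESTIONS_PER_FLIGHT = {
    "DeepSeek R1 (70B)": 600_000,
    "Qwen 2.5 (72B)": 1_900_000,
}


def grams_per_question(questions: int, budget: float = FLIGHT_GRAMS_CO2E) -> float:
    """Spread a fixed emissions budget evenly over the questions answered."""
    return budget / questions


per_question = {name: grams_per_question(n) for name, n in QUESTIONS_PER_FLIGHT.items()}
for name, grams in per_question.items():
    print(f"{name}: {grams:.3f} g CO2e per question")

# The budget cancels, so this is simply 1.9 million / 600,000.
ratio = per_question["DeepSeek R1 (70B)"] / per_question["Qwen 2.5 (72B)"]
print(f"DeepSeek R1 emits about {ratio:.2f}x as much per question")
```

Since the 1.9 million figure is itself approximate, the resulting ratio of a little over three should be read as a rough estimate.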

The researchers noted that their results may depend on the hardware used in the study, on an emission factor that varies regionally with the local energy grid mix, and on the particular models examined. These factors may limit how far the results generalize.
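An emission factor converts electricity use into CO₂ equivalent: emissions equal the energy consumed multiplied by the grid's emission factor, in grams of CO₂e per kilowatt-hour. The sketch below uses a hypothetical energy figure and two hypothetical grid factors to show why the same workload can produce very different emissions in different regions; none of these numbers come from the study.

```python
# Hypothetical energy use for one batch of questions, in kilowatt-hours.
ENERGY_KWH = 10.0

# Hypothetical grid emission factors in grams of CO2e per kWh.
# Real factors vary by region and over time with the local energy mix.
GRID_FACTORS_G_PER_KWH = {
    "low-carbon grid": 50.0,
    "fossil-heavy grid": 800.0,
}


def co2e_grams(energy_kwh: float, factor_g_per_kwh: float) -> float:
    """Convert electricity use to grams of CO2e via an emission factor."""
    return energy_kwh * factor_g_per_kwh


results = {grid: co2e_grams(ENERGY_KWH, f) for grid, f in GRID_FACTORS_G_PER_KWH.items()}
for grid, grams in results.items():
    print(f"{grid}: {grams:,.0f} g CO2e")
```

Identical energy use yields very different emissions depending only on the grid factor, which is one reason the authors caution against generalizing their figures.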

"If users know the exact CO₂ cost of their AI-generated outputs, such as casually turning themselves into an action figure, they might be more selective and thoughtful about when and how they use these technologies," Dauner concluded.

Reference: "Energy costs of communicating with AI" by Maximilian Dauner and Gudrun Socher, 30 April 2025, Frontiers in Communication.

DOI: 10.3389/fcomm.2025.1572947

News

Deadly Pancreatic Cancer Found To “Wire Itself” Into the Body’s Nerves

A newly discovered link between pancreatic cancer and neural signaling reveals a promising drug target that slows tumor growth by blocking glutamate uptake. Pancreatic cancer is among the most deadly cancers, and scientists are [...]

This Simple Brain Exercise May Protect Against Dementia for 20 Years

A long-running study following thousands of older adults suggests that a relatively brief period of targeted brain training may have effects that last decades. Starting in the late 1990s, close to 3,000 older adults [...]

Scientists Crack a 50-Year Tissue Mystery With Major Cancer Implications

Researchers have resolved a 50-year-old scientific mystery by identifying the molecular mechanism that allows tissues to regenerate after severe damage. The discovery could help guide future treatments aimed at reducing the risk of cancer [...]

This New Blood Test Can Detect Cancer Before Tumors Appear

A new CRISPR-powered light sensor can detect the faintest whispers of cancer in a single drop of blood. Scientists have created an advanced light-based sensor capable of identifying extremely small amounts of cancer biomarkers [...]

Blindness Breakthrough? This Snail Regrows Eyes in 30 Days

A snail that regrows its eyes may hold the genetic clues to restoring human sight. Human eyes are intricate organs that cannot regrow once damaged. Surprisingly, they share key structural features with the eyes [...]

This Is Why the Same Virus Hits People So Differently

Scientists have mapped how genetics and life experiences leave lasting epigenetic marks on immune cells. The discovery helps explain why people respond so differently to the same infections and could lead to more personalized [...]

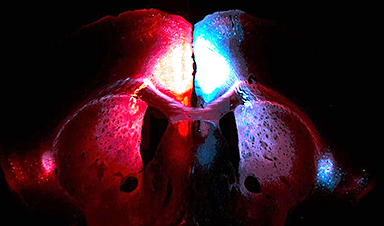

Rejuvenating neurons restores learning and memory in mice

EPFL scientists report that briefly switching on three “reprogramming” genes in a small set of memory-trace neurons restored memory in aged mice and in mouse models of Alzheimer’s disease to level of healthy young [...]

New book from Nanoappsmedical Inc. – Global Health Care Equivalency

A new book by Frank Boehm, NanoappsMedical Inc. Founder. This groundbreaking volume explores the vision of a Global Health Care Equivalency (GHCE) system powered by artificial intelligence and quantum computing technologies, operating on secure [...]

New Molecule Blocks Deadliest Brain Cancer at Its Genetic Root

Researchers have identified a molecule that disrupts a critical gene in glioblastoma. Scientists at the UVA Comprehensive Cancer Center say they have found a small molecule that can shut down a gene tied to glioblastoma, a [...]

Scientists Finally Solve a 30-Year-Old Cancer Mystery Hidden in Rye Pollen

Nearly 30 years after rye pollen molecules were shown to slow tumor growth in animals, scientists have finally determined their exact three-dimensional structures. Nearly 30 years ago, researchers noticed something surprising in rye pollen: [...]

NanoMedical Brain/Cloud Interface – Explorations and Implications. A new book from Frank Boehm

New book from Frank Boehm, NanoappsMedical Inc Founder: This book explores the future hypothetical possibility that the cerebral cortex of the human brain might be seamlessly, safely, and securely connected with the Cloud via [...]

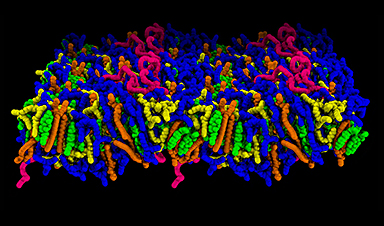

How lipid nanoparticles carrying vaccines release their cargo

A study from FAU has shown that lipid nanoparticles restructure their membrane significantly after being absorbed into a cell and ending up in an acidic environment. Vaccines and other medicines are often packed in [...]

New book from NanoappsMedical Inc – Molecular Manufacturing: The Future of Nanomedicine

This book explores the revolutionary potential of atomically precise manufacturing technologies to transform global healthcare, as well as practically every other sector across society. This forward-thinking volume examines how envisaged Factory@Home systems might enable the cost-effective [...]

A Virus Designed in the Lab Could Help Defeat Antibiotic Resistance

Scientists can now design bacteria-killing viruses from DNA, opening a faster path to fighting superbugs. Bacteriophages have been used as treatments for bacterial infections for more than a century. Interest in these viruses is rising [...]

Sleep Deprivation Triggers a Strange Brain Cleanup

When you don’t sleep enough, your brain may clean itself at the exact moment you need it to think. Most people recognize the sensation. After a night of inadequate sleep, staying focused becomes harder [...]

Lab-grown corticospinal neurons offer new models for ALS and spinal injuries

Researchers have developed a way to grow a highly specialized subset of brain nerve cells that are involved in motor neuron disease and damaged in spinal injuries. Their study, published today in eLife as the final [...]