Medical diagnostics expert, doctor's assistant, and cartographer are all fair titles for an artificial intelligence model developed by researchers at the Beckman Institute for Advanced Science and Technology.

Their new model accurately identifies tumors and diseases in medical images and is programmed to explain each diagnosis with a visual map. The tool's unique transparency allows doctors to easily follow its line of reasoning, double-check for accuracy, and explain the results to patients.

"The idea is to help catch cancer and disease in its earliest stages — like an X on a map — and understand how the decision was made. Our model will help streamline that process and make it easier on doctors and patients alike," said Sourya Sengupta, the study's lead author and a graduate research assistant at the Beckman Institute.

This research appeared in IEEE Transactions on Medical Imaging.

Cats and dogs and onions and ogres

First conceptualized in the 1950s, artificial intelligence (the concept that computers can learn to adapt, analyze, and problem-solve like humans do) has become a household name, due in part to ChatGPT and its extended family of easy-to-use tools.

Machine learning, or ML, is one of many methods researchers use to create artificially intelligent systems. ML is to AI what driver's education is to a 15-year-old: a controlled, supervised environment to practice decision-making, calibrate to new environments, and reroute after a mistake or wrong turn.

Deep learning, machine learning's wiser and worldlier relative, can digest larger quantities of information to make more nuanced decisions. Deep learning models derive their decisive power from deep neural networks, computer systems loosely modeled on the human brain.

These networks (just like humans, onions, and ogres) have layers, which makes them tricky to navigate. The deeper and more nonlinear a network's intellectual thicket, the better it tends to perform complex, human-like tasks.

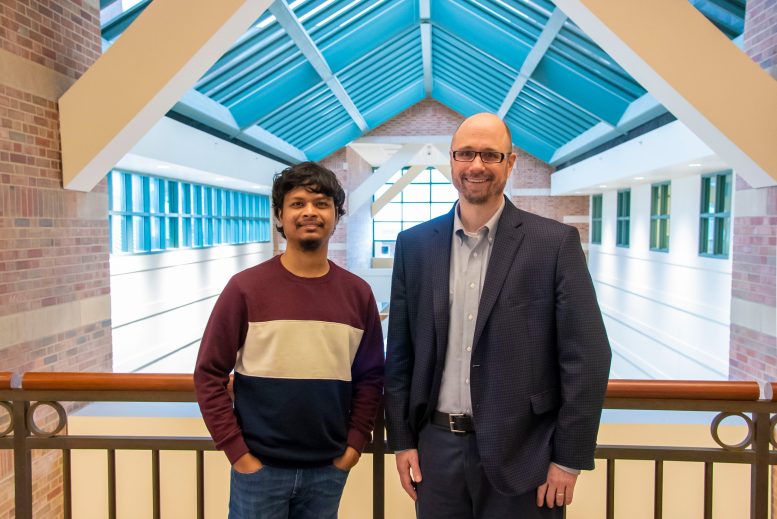

Researchers at the Beckman Institute led by Mark Anastasio (right) and Sourya Sengupta developed an artificial intelligence model that can accurately identify tumors and diseases in medical images. The tool draws a map to explain each diagnosis, helping doctors follow its line of reasoning, check for accuracy, and explain the results to patients. Credit: Jenna Kurtzweil, Beckman Institute Communications Office

Consider a neural network trained to differentiate between pictures of cats and pictures of dogs. The model learns by reviewing images in each category and filing away their distinguishing features (like size, color, and anatomy) for future reference. Eventually, the model learns to watch out for whiskers and cry Doberman at the first sign of a floppy tongue.

But deep neural networks are not infallible — much like overzealous toddlers, said Sengupta, who studies biomedical imaging in the University of Illinois Urbana-Champaign Department of Electrical and Computer Engineering.

"They get it right sometimes, maybe even most of the time, but it might not always be for the right reasons," he said. "I'm sure everyone knows a child who saw a brown, four-legged dog once and then thought that every brown, four-legged animal was a dog."

Sengupta's gripe? If you ask a toddler how they decided, they will probably tell you.

"But you can't ask a deep neural network how it arrived at an answer," he said.

The black box problem

Sleek, skilled, and speedy as they may be, deep neural networks struggle to master the fundamental skill drilled into high school calculus students: showing their work. This is referred to as the black box problem of artificial intelligence, and it has baffled scientists for years.

On the surface, coaxing a confession from the reluctant network that mistook a Pomeranian for a cat does not seem especially crucial. But the stakes of the black box problem rise as the images in question become more life-altering: for example, X-ray images from a mammogram that may indicate early signs of breast cancer.

The process of decoding medical images looks different in different regions of the world.

"In many developing countries, there is a scarcity of doctors and a long line of patients. AI can be helpful in these scenarios," Sengupta said.

When time and talent are in high demand, automated medical image screening can be deployed as an assistive tool — in no way replacing the skill and expertise of doctors, Sengupta said. Instead, an AI model can pre-scan medical images and flag those containing something unusual — like a tumor or early sign of disease, called a biomarker — for a doctor's review. This method saves time and can even improve the performance of the person tasked with reading the scan.

These models work well, but their bedside manner leaves much to be desired when, for example, a patient asks why an AI system flagged an image as containing (or not containing) a tumor.

Historically, researchers have answered questions like this with a slew of tools designed to decipher the black box from the outside in. Unfortunately, the researchers using them often face the same plight as an eavesdropper leaning against a locked door with an empty glass to their ear.

"It would be so much easier to simply open the door, walk inside the room, and listen to the conversation firsthand," Sengupta said.

To further complicate the matter, many variations of these interpretation tools exist. This means that any given black box may be interpreted in "plausible but different" ways, Sengupta said.

"And now the question is: which interpretation do you believe?" he said. "There is a chance that your choice will be influenced by your subjective bias, and therein lies the main problem with traditional methods."

Sengupta's solution? An entirely new type of AI model that interprets itself every time, explaining each decision instead of blandly reporting the binary of "tumor versus non-tumor," he said.

No water glass needed, in other words, because the door has disappeared.

Mapping the model

A yogi learning a new posture must practice it repeatedly. An AI model trained to tell cats from dogs must study countless images of both quadrupeds.

An AI model functioning as a doctor's assistant is raised on a diet of thousands of medical images, some with abnormalities and some without. When faced with something never-before-seen, it runs a quick analysis and spits out a number between 0 and 1. If the number is less than .5, the image is assumed not to contain a tumor; a number greater than .5 warrants a closer look.
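The decision rule above can be sketched in a few lines of Python. This is a minimal illustration, assuming the model's score is already available as a number between 0 and 1; the function name `flag_for_review`, the file names, and the choice to leave a score of exactly .5 unflagged are hypothetical, not details from the study.

```python
def flag_for_review(score: float, threshold: float = 0.5) -> bool:
    """Hypothetical helper: return True if a scan warrants a closer look.

    `score` is assumed to be the model's output in [0, 1]. Scores strictly
    above `threshold` are flagged; a score of exactly 0.5 is not (an
    illustrative choice, since the rule for that edge case is unspecified).
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must lie in [0, 1], got {score}")
    return score > threshold


# Pre-screen a small batch of made-up scores and keep the flagged scans
# for a doctor's review.
scores = {"scan_a.png": 0.12, "scan_b.png": 0.87, "scan_c.png": 0.50}
flagged = [name for name, s in scores.items() if flag_for_review(s)]
print(flagged)  # prints ['scan_b.png']
```

In a screening setting like the one Sengupta describes, the flagged list would simply go to a human reader; the threshold is a tunable parameter rather than a fixed law.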

Sengupta's new AI model mimics this setup with a twist: the model produces a value plus a visual map explaining its decision.

The map, which the researchers call an equivalency map (E-map for short), is essentially a transformed version of the original X-ray, mammogram, or other medical image. Like a paint-by-numbers canvas, each region of the E-map is assigned a number. The greater the value, the more medically interesting the region is for predicting the presence of an anomaly. The model sums up the values to arrive at its final figure, which then informs the diagnosis.

"For example, if the total sum is 1, and you have three values represented on the map — .5, .3, and .2 — a doctor can see exactly which areas on the map contributed more to that conclusion and investigate those more fully," Sengupta said.

This way, doctors can double-check how well the deep neural network is working — like a teacher checking the work on a student's math problem — and respond to patients' questions about the process.

"The result is a more transparent, trustable system between doctor and patient," Sengupta said.
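To make the E-map arithmetic in Sengupta's example concrete, here is a minimal NumPy sketch that treats an E-map as a small grid of per-region values whose sum is the model's final figure, then ranks the regions by how much each one contributed. The 2×2 grid, its layout, and the function names are hypothetical; in the real model the E-map is produced by the trained network at the resolution of the input image, and this sketch does not reproduce that network.

```python
import numpy as np


def score_from_emap(emap: np.ndarray) -> float:
    """Sum the per-region values of an E-map into a single figure."""
    return float(emap.sum())


def rank_regions(emap: np.ndarray) -> list[tuple[tuple[int, int], float]]:
    """Return ((row, col), value) pairs sorted by contribution, largest first."""
    order = np.argsort(emap, axis=None)[::-1]      # flat indices, descending
    rows, cols = np.unravel_index(order, emap.shape)
    return [((int(r), int(c)), float(emap[r, c])) for r, c in zip(rows, cols)]


# Hypothetical 2x2 E-map mirroring the quoted example: three regions carry
# .5, .3, and .2, and the fourth region contributes nothing.
emap = np.array([[0.5, 0.0],
                 [0.3, 0.2]])

print(f"{score_from_emap(emap):.2f}")  # prints 1.00
for (r, c), value in rank_regions(emap):
    print(f"region ({r}, {c}) contributed {value:.1f}")
```

Ranking the regions mirrors what a doctor would do by eye: start with the area that contributed .5, then check the .3 and .2 regions.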

X marks the spot

The researchers trained their model on three different disease diagnosis tasks spanning more than 20,000 images in total.

First, the model reviewed simulated mammograms and learned to flag early signs of tumors. Second, it analyzed optical coherence tomography images of the retina, where it practiced identifying a buildup called drusen that may be an early sign of macular degeneration. Third, the model studied chest X-rays and learned to detect cardiomegaly, an enlargement of the heart that can be associated with heart disease.

Once the mapmaking model had been trained, the researchers compared its performance to existing black-box AI systems, the ones without a self-interpretation setting. The new model performed comparably to its counterparts in all three categories, with accuracy rates of 77.8% for mammograms, 99.1% for retinal OCT images, and 83% for chest X-rays, compared with 77.8%, 99.1%, and 83.33% for the existing models.

These accuracy rates are a product of the deep neural network, whose non-linear layers loosely mimic the nuance of human neurons.

To create such a complicated system, the researchers peeled the proverbial onion and drew inspiration from linear neural networks, which are simpler and easier to interpret.

"The question was: How can we leverage the concepts behind linear models to make non-linear deep neural networks also interpretable like this?" said principal investigator Mark Anastasio, a Beckman Institute researcher and the Donald Biggar Willett Professor and Head of the Illinois Department of Bioengineering. "This work is a classic example of how fundamental ideas can lead to some novel solutions for state-of-the-art AI models."
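Why linear models are easy to interpret can be shown in a short sketch. For a linear classifier with a weight template, the output is the dot product of the template and the image, so the elementwise product of the two is itself a map whose entries add up exactly to the output. The random 4×4 "image" and template below are invented stand-ins, and the sketch only illustrates the linear case; the study's method for obtaining such maps from non-linear networks is not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical 4x4 "image" and linear template (weights): random stand-ins,
# not data or weights from the study.
image = rng.random((4, 4))
template = rng.normal(size=(4, 4))

# A linear model's score is the dot product of template and image.
score = float(np.dot(template.ravel(), image.ravel()))

# The elementwise product is a per-pixel contribution map...
contribution_map = template * image

# ...whose entries sum to the same score (up to floating-point rounding).
assert np.isclose(contribution_map.sum(), score)

# Pixel with the largest positive contribution to the score.
top = np.unravel_index(np.argmax(contribution_map), contribution_map.shape)
print(f"score = {score:.4f}, largest contribution at pixel {tuple(int(i) for i in top)}")
```

For a deep, non-linear network no single fixed template exists, which is the gap the researchers set out to close.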

The researchers hope that future models will be able to detect and diagnose anomalies all over the body and even differentiate between them.

"I am excited about our tool's direct benefit to society, not only in terms of improving disease diagnoses but also improving trust and transparency between doctors and patients," Anastasio said.

Reference: "A Test Statistic Estimation-based Approach for Establishing Self-interpretable CNN-based Binary Classifiers" by Sourya Sengupta and Mark A. Anastasio, 1 January 2024, IEEE Transactions on Medical Imaging.

DOI: 10.1109/TMI.2023.3348699
