Doctors experimenting with AI tools to help diagnose patients is nothing new. But getting them to trust the AIs they’re using is another matter entirely.

To establish that trust, researchers at Cornell University attempted to create a more transparent AI system that counsels doctors the way a human colleague would: by arguing over what the medical literature says.

Their resulting study, which will be presented at the Association for Computing Machinery Conference on Human Factors in Computing Systems later this month, found that how a medical AI works isn’t nearly as important to earning a doctor’s trust as the sources it cites in its suggestions.

“A doctor’s primary job is not to learn how AI works,” said Qian Yang, an assistant professor of information science at Cornell who led the study, in a press release. “If we can build systems that help validate AI suggestions based on clinical trial results and journal articles, which are trustworthy information for doctors, then we can help them understand whether the AI is likely to be right or wrong for each specific case.”

“We built a system that basically tries to recreate the interpersonal communication that we observed when the doctors give suggestions to each other, and fetches the same kind of evidence from clinical literature to support the AI’s suggestion,” Yang said.

The AI tool Yang’s team created is based on GPT-3, an older large language model from OpenAI, the maker of ChatGPT. The tool’s interface is fairly straightforward: one side shows the AI’s suggestions, and the other shows the relevant biomedical literature the AI retrieved, along with brief summaries of each study and other helpful details such as patient outcomes.

So far, the team has developed versions of the tool for three medical specialties: neurology, psychiatry, and palliative care. When doctors tried the versions tailored to their respective fields, they told the researchers that they liked the presentation of the medical literature, and affirmed that they preferred it to an explanation of how the AI worked.

This specialized AI seems to be faring better than ChatGPT’s attempt at playing doctor in a larger study, which found that 60 percent of its answers to real medical scenarios either disagreed with human experts’ opinions or were too irrelevant to be helpful.

But the jury is still out on how the Cornell researchers’ AI would hold up when subjected to a similar analysis.

Overall, it’s worth noting that while these tools may be helpful to doctors who have years of expertise to inform their decisions, we’re still a very long way from an “AI medical advisor” that can replace them.

News

Nano-Enhanced Hydrogel Strategies for Cartilage Repair

A recent article in Engineering describes the development of a protein-based nanocomposite hydrogel designed to deliver two therapeutic agents—dexamethasone (Dex) and kartogenin (KGN)—to support cartilage repair. The hydrogel is engineered to modulate immune responses and promote [...]

New Cancer Drug Blocks Tumors Without Debilitating Side Effects

A new drug targets RAS-PI3Kα pathways without harmful side effects. It was developed using high-performance computing and AI. A new cancer drug candidate, developed through a collaboration between Lawrence Livermore National Laboratory (LLNL), BridgeBio Oncology [...]

Scientists Are Pretty Close to Replicating the First Thing That Ever Lived

For 400 million years, a leading hypothesis claims, Earth was an “RNA World,” meaning that life must’ve first replicated from RNA before the arrival of proteins and DNA. Unfortunately, scientists have failed to find [...]

Why ‘Peniaphobia’ Is Exploding Among Young People (And Why We Should Be Concerned)

An insidious illness is taking hold among a growing proportion of young people. Little known to the general public, peniaphobia—the fear of becoming poor—is gaining ground among teens and young adults. Discover the causes [...]

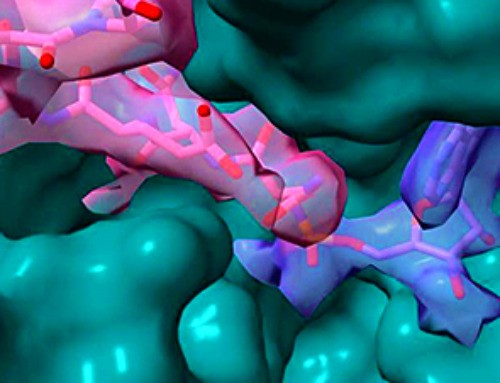

Team finds flawed data in recent study relevant to coronavirus antiviral development

The COVID pandemic illustrated how urgently we need antiviral medications capable of treating coronavirus infections. To aid this effort, researchers quickly homed in on part of SARS-CoV-2's molecular structure known as the NiRAN domain—an [...]

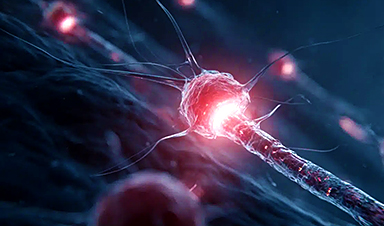

Drug-Coated Neural Implants Reduce Immune Rejection

Summary: A new study shows that coating neural prosthetic implants with the anti-inflammatory drug dexamethasone helps reduce the body’s immune response and scar tissue formation. This strategy enhances the long-term performance and stability of electrodes [...]

Scientists discover cancer-fighting bacteria that ‘soak up’ forever chemicals in the body

A family of healthy bacteria may help 'soak up' toxic forever chemicals in the body, warding off their cancerous effects. Forever chemicals, also known as PFAS (per- and polyfluoroalkyl substances), are toxic chemicals that [...]

Johns Hopkins Researchers Uncover a New Way To Kill Cancer Cells

A new study reveals that blocking ribosomal RNA production rewires cancer cell behavior and could help treat genetically unstable tumors. Researchers at the Johns Hopkins Kimmel Cancer Center and the Department of Radiation Oncology and Molecular [...]

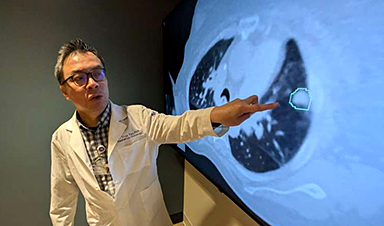

AI matches doctors in mapping lung tumors for radiation therapy

In radiation therapy, precision can save lives. Oncologists must carefully map the size and location of a tumor before delivering high-dose radiation to destroy cancer cells while sparing healthy tissue. But this process, called [...]

Scientists Finally “See” Key Protein That Controls Inflammation

Researchers used advanced microscopy to uncover important protein structures. For the first time, two important protein structures in the human body are being visualized, thanks in part to cutting-edge technology at the University of [...]

AI tool detects 9 types of dementia from a single brain scan

Mayo Clinic researchers have developed a new artificial intelligence (AI) tool that helps clinicians identify brain activity patterns linked to nine types of dementia, including Alzheimer's disease, using a single, widely available scan—a transformative [...]

Is plastic packaging putting more than just food on your plate?

New research reveals that common food packaging and utensils can shed microscopic plastics into our food, prompting urgent calls for stricter testing and updated regulations to protect public health. Beyond microplastics: The analysis intentionally [...]

Aging Spreads Through the Bloodstream

Summary: New research reveals that aging isn’t just a local cellular process—it can spread throughout the body via the bloodstream. A redox-sensitive protein called ReHMGB1, secreted by senescent cells, was found to trigger aging features [...]

AI and nanomedicine find rare biomarkers for prostrate cancer and atherosclerosis

Imagine a stadium packed with 75,000 fans, all wearing green and white jerseys—except one person in a solid green shirt. Finding that person would be tough. That's how hard it is for scientists to [...]

Are Pesticides Breeding the Next Pandemic? Experts Warn of Fungal Superbugs

Fungicides used in agriculture have been linked to an increase in resistance to antifungal drugs in both humans and animals. Fungal infections are on the rise, and two UC Davis infectious disease experts, Dr. George Thompson [...]

Scientists Crack the 500-Million-Year-Old Code That Controls Your Immune System

A collaborative team from Penn Medicine and Penn Engineering has uncovered the mathematical principles behind a 500-million-year-old protein network that determines whether foreign materials are recognized as friend or foe. How does your body [...]