One question has relentlessly followed ChatGPT on its rise to superstar status in artificial intelligence: Has it passed the Turing test by generating output indistinguishable from human responses?

ChatGPT may be smart, quick and impressive. It does a good job at exhibiting apparent intelligence. It sounds humanlike in conversations with people and can even display humor, emulate the phraseology of teenagers, and pass exams for law school.

But on occasion, it has been found to serve up totally false information. It hallucinates. It does not reflect on its own output.

Cameron Jones, who specializes in language, semantics and machine learning, and Benjamin Bergen, a professor of cognitive science, drew on the work of Alan Turing. More than 70 years ago, Turing devised a procedure to determine whether a machine could reach a level of intelligence and conversational skill at which it could fool someone into thinking it was human.

Their report titled “Does GPT-4 Pass the Turing Test?” is available on the arXiv preprint server.

They recruited 650 participants and ran 1,400 "games," in which each participant held a brief conversation with either another human or a GPT model and was then asked to determine which one they had been talking to.

The researchers found that GPT-4 models fooled participants 41% of the time, while GPT-3.5 models fooled them only 5% to 14% of the time. Interestingly, human witnesses convinced participants that they were not machines in only 63% of the trials.

The researchers concluded, “We do not find evidence that GPT-4 passes the Turing Test.”

They noted, however, that the Turing test still retains value as a measure of the effectiveness of machine dialogue.

“The test has ongoing relevance as a framework to measure fluent social interaction and deception, and for understanding human strategies to adapt to these devices,” they said.

They warned, however, that chatbots can often communicate convincingly enough to fool users.

“A success rate of 41% suggests that deception by AI models may already be likely, especially in contexts where human interlocutors are less alert to the possibility they are not speaking to a human,” they said. “AI models that can robustly impersonate people could have widespread social and economic consequences.”

The researchers observed that participants making correct identifications focused on several factors.

Models that were too formal or too informal raised red flags for participants. Responses that were too wordy or too brief, or whose grammar and punctuation were exceptionally good or "unconvincingly" bad, also became key factors in determining whether participants were dealing with humans or machines.

Test takers also were sensitive to generic-sounding responses.

“LLMs learn to produce highly likely completions and are fine-tuned to avoid controversial opinions. These processes might encourage generic responses that are typical overall, but lack the idiosyncrasy typical of an individual: a sort of ecological fallacy,” the researchers said.

The researchers suggested that it will be important to track AI models as they become more fluent and absorb more humanlike quirks in conversation.

“It will become increasingly important to identify factors that lead to deception and strategies to mitigate it,” they said.

News

Team finds flawed data in recent study relevant to coronavirus antiviral development

The COVID pandemic illustrated how urgently we need antiviral medications capable of treating coronavirus infections. To aid this effort, researchers quickly homed in on part of SARS-CoV-2's molecular structure known as the NiRAN domain—an [...]

Drug-Coated Neural Implants Reduce Immune Rejection

Summary: A new study shows that coating neural prosthetic implants with the anti-inflammatory drug dexamethasone helps reduce the body’s immune response and scar tissue formation. This strategy enhances the long-term performance and stability of electrodes [...]

Scientists discover cancer-fighting bacteria that ‘soak up’ forever chemicals in the body

A family of healthy bacteria may help 'soak up' toxic forever chemicals in the body, warding off their cancerous effects. Forever chemicals, also known as PFAS (per- and polyfluoroalkyl substances), are toxic chemicals that [...]

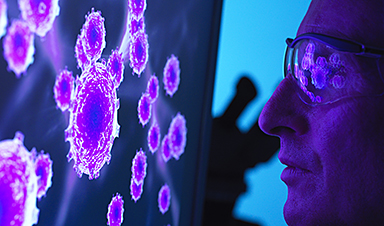

Johns Hopkins Researchers Uncover a New Way To Kill Cancer Cells

A new study reveals that blocking ribosomal RNA production rewires cancer cell behavior and could help treat genetically unstable tumors. Researchers at the Johns Hopkins Kimmel Cancer Center and the Department of Radiation Oncology and Molecular [...]

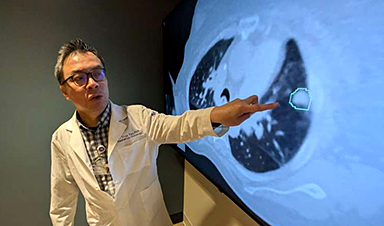

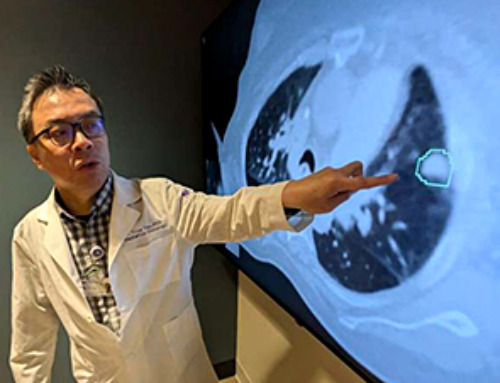

AI matches doctors in mapping lung tumors for radiation therapy

In radiation therapy, precision can save lives. Oncologists must carefully map the size and location of a tumor before delivering high-dose radiation to destroy cancer cells while sparing healthy tissue. But this process, called [...]

Scientists Finally “See” Key Protein That Controls Inflammation

Researchers used advanced microscopy to uncover important protein structures. For the first time, two important protein structures in the human body are being visualized, thanks in part to cutting-edge technology at the University of [...]

AI tool detects 9 types of dementia from a single brain scan

Mayo Clinic researchers have developed a new artificial intelligence (AI) tool that helps clinicians identify brain activity patterns linked to nine types of dementia, including Alzheimer's disease, using a single, widely available scan—a transformative [...]

Is plastic packaging putting more than just food on your plate?

New research reveals that common food packaging and utensils can shed microscopic plastics into our food, prompting urgent calls for stricter testing and updated regulations to protect public health. Beyond microplastics: The analysis intentionally [...]

Aging Spreads Through the Bloodstream

Summary: New research reveals that aging isn’t just a local cellular process—it can spread throughout the body via the bloodstream. A redox-sensitive protein called ReHMGB1, secreted by senescent cells, was found to trigger aging features [...]

AI and nanomedicine find rare biomarkers for prostrate cancer and atherosclerosis

Imagine a stadium packed with 75,000 fans, all wearing green and white jerseys—except one person in a solid green shirt. Finding that person would be tough. That's how hard it is for scientists to [...]

Are Pesticides Breeding the Next Pandemic? Experts Warn of Fungal Superbugs

Fungicides used in agriculture have been linked to an increase in resistance to antifungal drugs in both humans and animals. Fungal infections are on the rise, and two UC Davis infectious disease experts, Dr. George Thompson [...]

Scientists Crack the 500-Million-Year-Old Code That Controls Your Immune System

A collaborative team from Penn Medicine and Penn Engineering has uncovered the mathematical principles behind a 500-million-year-old protein network that determines whether foreign materials are recognized as friend or foe. How does your body [...]

Team discovers how tiny parts of cells stay organized, new insights for blocking cancer growth

A team of international researchers led by scientists at City of Hope provides the most thorough account yet of an elusive target for cancer treatment. Published in Science Advances, the study suggests a complex signaling [...]

Nanomaterials in Ophthalmology: A Review

Eye diseases are becoming more common. In 2020, over 250 million people had mild vision problems, and 295 million experienced moderate to severe ocular conditions. In response, researchers are turning to nanotechnology and nanomaterials—tools that are transforming [...]

Natural Plant Extract Removes up to 90% of Microplastics From Water

Researchers found that natural polymers derived from okra and fenugreek are highly effective at removing microplastics from water. The same sticky substances that make okra slimy and give fenugreek its gel-like texture could help [...]

Instant coffee may damage your eyes, genetic study finds

A new genetic study shows that just one extra cup of instant coffee a day could significantly increase your risk of developing dry AMD, shedding fresh light on how our daily beverage choices may [...]