While AI models can break down problems into structured steps, new research reveals they still fail at basic arithmetic and factual recall, raising questions about their true reasoning abilities.

Large Language Models (LLMs) have become indispensable in natural language processing, excelling at tasks such as sentiment analysis, reading comprehension, and answering factual questions. However, their ability to perform complex, multi-step reasoning remains a significant challenge, particularly in question-answering tasks that demand logical inference rather than simple recall. This study, authored by Nick Ferguson, Liane Guillou, Alan Bundy, and Kwabena Nuamah from the University of Edinburgh and Aveni, examines the extent to which LLMs can engage in two distinct forms of reasoning: meta-level and object-level reasoning.

Understanding Meta-Level and Object-Level Reasoning

Meta-level reasoning involves high-level strategic thinking, including problem decomposition and the formulation of intermediate steps necessary to solve a question. Object-level reasoning, in contrast, refers to the execution of these steps, such as performing mathematical calculations, retrieving specific facts, or applying symbolic logic. To evaluate the capabilities of LLMs in these areas, the authors introduce FRANKLIN, a novel dataset that explicitly requires models to engage in both reasoning types. FRANKLIN is inspired by the FRANK system, a symbolic reasoning framework for question answering, and focuses on geopolitical indicators such as population trends, economic metrics, and regional comparisons. Alongside three established multi-step question-answering datasets, FRANKLIN serves as a benchmark for testing the performance of four specific LLM versions: Meta’s Llama 3.1 8B, Microsoft’s Phi 3.5 Mini, Google’s Gemma 2 9B, and OpenAI’s GPT-4o-mini. Through two human annotation studies, the researchers assess whether LLMs can successfully generate reasoned responses and whether prompting them to plan their answers before execution improves their performance.

How LLMs Approach Reasoning Tasks

The study situates its analysis within the broader context of LLM reasoning tasks. As a cognitive function, reasoning encompasses logical deduction, belief revision, and inference-making. Common sense reasoning requires an understanding of everyday concepts and the ability to infer implicit knowledge. Mathematical reasoning demands numerical operations and logical problem-solving, while symbolic reasoning involves rule-based manipulations, such as emulating formal logic or deducing relationships between abstract entities. Multi-step reasoning is particularly significant, as it necessitates the sequential application of inference processes to arrive at a final answer. Despite their advancements, LLMs often struggle with these tasks because they rely on statistical pattern-matching rather than genuine logical deduction.

Existing techniques attempt to improve LLM performance on reasoning tasks. Fine-tuning involves additional training on domain-specific datasets to enhance accuracy in particular tasks, while prompting techniques such as Chain-of-Thought (CoT) introduce explicit reasoning steps into model responses. These approaches have demonstrated improvements, yet doubts remain as to whether LLMs are genuinely reasoning or merely imitating structured thought patterns learned from their training data. The authors propose a more structured classification of LLM reasoning, distinguishing between meta-level and object-level processes. While meta-level reasoning involves planning, selecting relevant knowledge sources, and determining the steps required to solve a problem, object-level reasoning focuses on accurate execution, including factual retrieval, numerical precision, and logical deductions.
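The split between planning and execution can be illustrated with a minimal sketch. It is not the FRANK system or the authors' method: the step format, the function names `make_plan` and `execute`, and the figures in `TOY_FACTS` are all hypothetical and invented for illustration.

```python
# Made-up figures for illustration only; these are not real statistics.
TOY_FACTS = {
    ("Country A", "population", 2020): 50_000_000,
    ("Country B", "population", 2020): 42_000_000,
}


def make_plan(entity_a, entity_b, indicator, year):
    """Meta-level: decide which steps are needed, without executing them."""
    return [
        ("retrieve", entity_a, indicator, year),
        ("retrieve", entity_b, indicator, year),
        ("compare", entity_a, entity_b),
    ]


def execute(plan, facts):
    """Object-level: carry out each step by looking up values and comparing them."""
    values = {}
    for step in plan:
        if step[0] == "retrieve":
            _, entity, indicator, year = step
            values[entity] = facts[(entity, indicator, year)]
        elif step[0] == "compare":
            _, a, b = step
            return a if values[a] >= values[b] else b
    raise ValueError("plan contained no compare step")


plan = make_plan("Country A", "Country B", "population", 2020)
answer = execute(plan, TOY_FACTS)
```

In this framing, a response can have a sound plan and still give a wrong answer if a retrieved value or a comparison is wrong, which is the kind of failure the study separates out.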

FRANKLIN Dataset: A New Challenge for LLMs

To assess these reasoning types, the study introduces the FRANKLIN dataset, inspired by the FRANK system, which employs explicit symbolic reasoning to solve complex questions. FRANKLIN consists of complex questions requiring both meta- and object-level reasoning in the domain of geopolitical indicators, covering scenarios such as future predictions, regional comparisons, and historical trends. Each question is paired with a detailed explanation outlining the necessary reasoning steps. Unlike more straightforward fact-retrieval datasets, FRANKLIN poses a significant challenge for LLMs: they must not only determine the appropriate strategy for answering a question but also accurately retrieve and manipulate the relevant data.

How LLMs Were Evaluated: Two Human Annotation Studies

The evaluation design consists of two human annotation studies. In the first, LLMs were prompted to directly answer questions, allowing assessment of their object-level reasoning abilities. In the second, models were first asked to generate a plan before executing their reasoning steps, testing their meta-level reasoning skills. Participants rated responses based on their coherence, correctness, and the presence of structured reasoning. The study also introduced three key evaluation metrics:

- Answer Failure Rate (AFR) – the percentage of cases where an LLM provided no attempted answer.

- Rational Approach Rate (RAR) – the proportion of responses that outlined a coherent problem-solving approach.

- Plan Creation Rate (PCR) – the percentage of responses that structured their reasoning in a clear, step-by-step manner.
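As a rough sketch of how the three rates above could be computed from annotator judgements, assume each response receives three yes/no labels. The `Annotation` schema and function names are hypothetical, not the paper's annotation format, and the sample data is invented.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    """One human judgement of one model response (hypothetical schema)."""
    attempted_answer: bool   # did the response attempt an answer at all?
    rational_approach: bool  # did it outline a coherent problem-solving approach?
    created_plan: bool       # did it lay out clear, step-by-step reasoning?


def rate(flags):
    """Percentage of True values among the flags; 0.0 if there are none."""
    flags = list(flags)
    return 100.0 * sum(flags) / len(flags) if flags else 0.0


def summarise(annotations):
    """Compute AFR, RAR and PCR as percentages over a list of annotations."""
    return {
        "AFR": rate(not a.attempted_answer for a in annotations),
        "RAR": rate(a.rational_approach for a in annotations),
        "PCR": rate(a.created_plan for a in annotations),
    }


# Invented sample: four annotated responses.
sample = [
    Annotation(True, True, True),
    Annotation(True, True, True),
    Annotation(True, False, True),
    Annotation(False, False, False),
]
print(summarise(sample))
```

Note that AFR counts failures, so lower is better, whereas higher RAR and PCR indicate more coherent and more structured responses.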

The results reveal a clear divergence in LLM performance between these two reasoning levels.

Key Findings: Meta-Level Strength, Object-Level Weakness

Across all datasets, LLMs consistently demonstrated strong meta-level reasoning. Responses often contained structured, step-by-step explanations that human annotators rated as rational and interpretable. Even for complex questions in FRANKLIN, models exhibited an ability to break down problems into intermediate steps and articulate a plan for solving them. However, while these responses appeared structured, the study raises concerns about whether they represent true reasoning or simply an imitation of learned patterns.

In contrast, LLMs struggled significantly with object-level reasoning, with failures especially frequent when questions required numerical precision or factual recall. In FRANKLIN, for example, models frequently fabricated numerical data, provided incorrect values, or made basic arithmetic errors. Even when models successfully identified the correct reasoning path, they often failed to follow through with accurate computations or fact retrieval. Error patterns included:

- Fabricating numerical data (e.g., citing non-existent sources).

- Retrieving inaccurate or imprecise information (e.g., rounding values incorrectly).

- Performing incorrect calculations (even for simple arithmetic operations).

A closer analysis of errors highlights the nature of these failures. Some responses contained entirely fabricated data, where models cited non-existent sources or invented statistical figures. Others retrieved information with reduced precision, rounding values or omitting key details necessary for accurate comparisons. In mathematical tasks, models often produced incorrect calculations, even for simple operations. These findings suggest that while LLMs can structure their responses in a way that appears logical, they lack the robust execution skills necessary to reliably generate correct answers in domains requiring object-level reasoning.

Implications for LLM Development

The findings have significant implications for the development of LLMs. While prompting models to engage in meta-level reasoning improves their ability to articulate coherent strategies, it does not address their deficiencies in object-level reasoning. This suggests that future advancements must focus on integrating external symbolic reasoning components, improving factual retrieval mechanisms, and refining numerical processing capabilities. The FRANKLIN dataset serves as a critical benchmark, demonstrating that even models with strong problem-decomposition skills struggle with execution.
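One way to picture an external symbolic component is a deterministic calculator: the model only writes out the arithmetic expression, and ordinary code evaluates it. The sketch below is an illustration under that assumption, not something proposed in the paper. The function name `evaluate` is hypothetical, and it supports only numbers and the four basic operators.

```python
import ast
import operator

# Operators the evaluator accepts; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def evaluate(expression):
    """Evaluate a plain arithmetic expression without calling eval()."""

    def walk(node):
        # Numeric literals (booleans are excluded even though bool subclasses int).
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)


# A model might emit the expression; the arithmetic itself is done here.
result = evaluate("(1200 - 1000) * 3")
```

This keeps the meta-level step (choosing which calculation to perform) with the model while taking the error-prone object-level step (performing it) out of the model's hands. It does not address fabricated or imprecise input values, which would need a separate retrieval mechanism.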

Conclusion: The Path Forward for AI Reasoning

In conclusion, the study highlights a critical distinction in the reasoning capabilities of LLMs. While they can effectively plan and structure problem-solving approaches, their ability to execute complex reasoning tasks remains limited. The study’s findings emphasize that LLMs are proficient at mimicking reasoning structures but not necessarily reasoning in a human-like, cognitive sense. The introduction of FRANKLIN offers a new means of evaluating these deficiencies, laying the groundwork for further research into improving LLM performance in multi-step question answering. The results underscore the need for continued refinement in how LLMs handle object-level reasoning, ensuring that future iterations can move beyond surface-level imitation and towards genuine cognitive reasoning abilities.

- Preliminary scientific report. Ferguson, N., Guillou, L., Bundy, A., & Nuamah, K. (2025). Evaluating the Meta- and Object-Level Reasoning of Large Language Models for Question Answering. arXiv. https://arxiv.org/abs/2502.10338

News

Scientists Rewire Natural Killer Cells To Attack Cancer Faster and Harder

Researchers tested new CAR designs in NK-92 cells and found the modified cells killed tumor cells more effectively, showing stronger anti-cancer activity. Researchers at the Ribeirão Preto Blood Center and the Center for Cell-Based [...]

New “Cellular” Target Could Transform How We Treat Alzheimer’s Disease

A new study from researchers highlights an unexpected player in Alzheimer’s disease: aging astrocytes. Senescent astrocytes have been identified as a major contributor to Alzheimer’s progression. The cells lose protective functions and fuel inflammation, particularly in [...]

Treating a Common Dental Infection… Effects That Extend Far Beyond the Mouth

Successful root canal treatment may help lower inflammation associated with heart disease and improve blood sugar and cholesterol levels. Treating an infected tooth with a successful root canal procedure may do more than relieve [...]

Microplastics found in prostate tumors in small study

In a new study, researchers found microplastics deep inside prostate cancer tumors, raising more questions about the role the ubiquitous pollutants play in public health. The findings — which come from a small study of 10 [...]

All blue-eyed people have this one thing in common

All Blue-Eyed People Have This One Thing In Common Blue Eyes Aren’t Random—Research Traces Them Back to One Prehistoric Human It sounds like a myth at first — something you’d hear in a folklore [...]

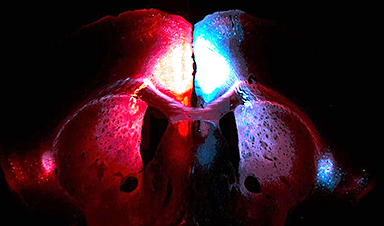

Scientists reveal how exercise protects the brain from Alzheimer’s

Researchers at UC San Francisco have identified a biological process that may explain why exercise sharpens thinking and memory. Their findings suggest that physical activity strengthens the brain's built in defense system, helping protect [...]

NanoMedical Brain/Cloud Interface – Explorations and Implications. A new book from Frank Boehm

New book from Frank Boehm, NanoappsMedical Inc Founder: This book explores the future hypothetical possibility that the cerebral cortex of the human brain might be seamlessly, safely, and securely connected with the Cloud via [...]

Deadly Pancreatic Cancer Found To “Wire Itself” Into the Body’s Nerves

A newly discovered link between pancreatic cancer and neural signaling reveals a promising drug target that slows tumor growth by blocking glutamate uptake. Pancreatic cancer is among the most deadly cancers, and scientists are [...]

This Simple Brain Exercise May Protect Against Dementia for 20 Years

A long-running study following thousands of older adults suggests that a relatively brief period of targeted brain training may have effects that last decades. Starting in the late 1990s, close to 3,000 older adults [...]

Scientists Crack a 50-Year Tissue Mystery With Major Cancer Implications

Researchers have resolved a 50-year-old scientific mystery by identifying the molecular mechanism that allows tissues to regenerate after severe damage. The discovery could help guide future treatments aimed at reducing the risk of cancer [...]

This New Blood Test Can Detect Cancer Before Tumors Appear

A new CRISPR-powered light sensor can detect the faintest whispers of cancer in a single drop of blood. Scientists have created an advanced light-based sensor capable of identifying extremely small amounts of cancer biomarkers [...]

Blindness Breakthrough? This Snail Regrows Eyes in 30 Days

A snail that regrows its eyes may hold the genetic clues to restoring human sight. Human eyes are intricate organs that cannot regrow once damaged. Surprisingly, they share key structural features with the eyes [...]

This Is Why the Same Virus Hits People So Differently

Scientists have mapped how genetics and life experiences leave lasting epigenetic marks on immune cells. The discovery helps explain why people respond so differently to the same infections and could lead to more personalized [...]

Rejuvenating neurons restores learning and memory in mice

EPFL scientists report that briefly switching on three “reprogramming” genes in a small set of memory-trace neurons restored memory in aged mice and in mouse models of Alzheimer’s disease to level of healthy young [...]

New book from Nanoappsmedical Inc. – Global Health Care Equivalency

A new book by Frank Boehm, NanoappsMedical Inc. Founder. This groundbreaking volume explores the vision of a Global Health Care Equivalency (GHCE) system powered by artificial intelligence and quantum computing technologies, operating on secure [...]

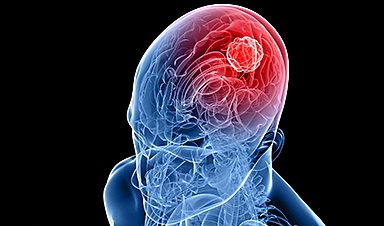

New Molecule Blocks Deadliest Brain Cancer at Its Genetic Root

Researchers have identified a molecule that disrupts a critical gene in glioblastoma. Scientists at the UVA Comprehensive Cancer Center say they have found a small molecule that can shut down a gene tied to glioblastoma, a [...]