An Historical Overview of the Philosophy of Artificial Intelligence

by Anton Vokrug

Many prominent figures in the philosophy of technology have tried to comprehend the essence of technology and link it to society and human experience. In the first half of the 20th century, their analyses mainly pointed to a divergence between technology and human life.

Technology was regarded as an autonomous force that crushed the basic parts of humanity. By bringing the concept of technology down to historical and transcendental assumptions, philosophers seemed to abstract from the impact of specific events.

Philosophy of Subjectivity of Artificial Intelligence

In the eighties, a more empirical view of technology developed, based on the ideas of American philosophers who integrated the impact of specific technologies into their views (Achterhuis, H.J., “Van Stoommachine tot cyborg; denken over techniek in de nieuwe wereld”, 1997). The interdependence of technology and society is the main topic of this study. This “empirical turn” made it possible to explain the versatility of technology and the many roles it can play in society. This approach was further developed among philosophers of technology, for example, at the University of Twente.

Artificial intelligence was established as a field of research in 1956. It is concerned with intelligent behavior in computing machines. The purposes of the research can be divided into four categories:

- Systems that think like humans.

- Systems that think rationally.

- Systems that act like humans.

- Systems that act rationally.

After many years of optimism about the ability to accomplish these tasks, the field ran into difficulties in representing intelligence in a way that would be useful in applications. These included a lack of basic knowledge, the complexity of computation, and limitations in knowledge representation structures (Russell, S. & Norvig, P., “Artificial Intelligence: A Modern Approach”, 2009). But the challenges came not only from the design community. Philosophers, who since the time of Plato had been concerned with the mind and reasoning, also started to object. Using both mathematical objections (based on Turing and Gödel) and more philosophical arguments about the nature of human intelligence, they tried to show the inherent limitations of the AI project. The most famous of them was Hubert Dreyfus.

Hubert Dreyfus on Artificial Intelligence

Dreyfus saw the goals and methods of artificial intelligence as expressing a clearly rationalist view of intelligence. This view has been defended by many rationalist philosophers throughout history, but Dreyfus himself was more of a proponent of 20th-century anti-rationalist philosophy, as found in the works of Heidegger, Merleau-Ponty, and Wittgenstein. According to Dreyfus, the most fundamental mode of cognition is intuitive, not rational. A person gaining experience in a certain field depends on formalized rules only while first learning how to reason in it. After that, intelligence is more likely to be represented by rules of thumb and intuitive decisions.

The rational approach of AI can be clearly traced in the foundations of what is called symbolic AI. Intelligent processes are viewed as a form of information processing, and the representation of this information is symbolic. Thus, intelligence is more or less limited to the manipulation of symbols. Dreyfus analyzed this as a combination of three fundamental assumptions:

- A psychological assumption that human intelligence is based on rules of symbol manipulation.

- An epistemological assumption that all knowledge can be formalized.

- An ontological assumption that reality has a formalizable structure.

Dreyfus not only criticized these assumptions but also defined some concepts that he believed were essential to intelligence. According to Dreyfus, intelligence is embodied and situated. Embodiment is difficult to explain, as it is unclear whether it means that intelligence requires a body or that it can only develop with the help of a body. But at least it is clear that Dreyfus considers intelligence to depend on the situation in which the intelligent agent finds itself, where the elements stand in a meaningful relation to their context. This prevents reality from being reduced to formalized entities. On Dreyfus’s view, machines that manipulate symbols cannot operate beyond a clearly defined formal domain.

Dreyfus has a more positive attitude towards the connectionist approach to artificial intelligence. This approach sees intelligent behavior emerging from modeled structures that resemble neurons and their connections in the human brain. But he doubts that machines of this kind can ever attain the complexity of the human brain.

Thus, Dreyfus initiated a discussion about the feasibility of AI goals. His work attracted a lot of attention and stirred up heated debate. He even managed to make some researchers change their point of view and start implementing systems that would be more compatible with his vision. Dreyfus demonstrated the assumptions made by symbolic AI and clarified that it is by no means obvious that these assumptions will result in real intelligent machines (Mind Over Machine: The Power of Human Intuition and Expertise in the Era of the Computer).

However, two remarks should be made. First, Dreyfus based his criticism on strictly symbolic AI approaches. In recent decades, there have been several attempts to create more hybrid intelligent systems and to implement non-rule-based methods in symbolic AI. These systems put forward a different view of intelligence that cannot be fully explained by Dreyfus’s analysis. Second, Dreyfus’s criticism seems to be based on a skeptical view of artificial intelligence, partly because of his own philosophical background and partly because its foundations were laid at a time when enthusiasm was almost unlimited.

Free will is a strange concept. Philosophy can discuss the human mind in many ways, but when it comes to the question of whether we are free in our decisions, the discussion becomes dangerous. We are so familiar with thinking in terms of will, decisions, and actions that we mostly refuse to even consider the possibility that we are not free in our choices. But there is something else. What if I were to say during such a discussion that humans have no free will at all? If the claim is false, I am wrong, and if it is true, then the whole remark loses its meaning because I could do nothing but say that. The denial of free will is a pragmatic contradiction. You cannot deny a person’s free will without making this denial meaningless.

Nevertheless, the question of free will seems to be relevant, since scientific theories can claim that everything that happens follows the laws of nature. So, we either need to grant people special properties or deny that the laws of nature are deterministic if we do not want to be deterministic organic machines. The first option is related to many philosophical theories, but most of all to the theory of Descartes, who divides the world into two substances (spirit and matter) that are connected in humans. The second option opens up a more holistic vision that uses the latest developments in physics (relativity, quantum mechanics) to show that our free will can be based on the unpredictable dynamics of nature.

The dualistic view of Descartes and others denies the existence of free will for things other than humans. Therefore, the discussion about free will and intelligent machines is not particularly interesting. On the other hand, the holistic view is more suitable for such a discussion, but it is difficult to come to any other conclusions than the physical assumptions required to assign the property of free will to humans or computers. This may be appropriate in a pure philosophical discussion, but has little to do with computer science.

There is also the possibility of recognizing that human nature is inherently contradictory, as both the deterministic view and the free will view are justified and necessary. This dialectical approach enables us to think about free will in humans without worrying about physical presuppositions. Free will becomes a transcendental presupposition of being human. However, the transcendental view of free will in this approach does not allow for the discussion of free will in specific artifacts such as intelligent machines, since it is impossible to model or design transcendental presuppositions. Further in this section, I will transform the complex concept of free will into a concept that can be used to analyze intelligent machines. This concept should be compatible with an empirical approach in the philosophy of technology. Therefore, we should avoid talking about the concept of free will in terms of physical or transcendental presuppositions and instead focus on the role that this concept plays in society.

The hint to my approach in this article can be found in the first paragraph of this section. My view of the debate on free will is that there are two fundamentally different approaches to any research in this field. The first one focuses on deep philosophical issues of the nature of free will and the ability of people to avoid the “demands” of nature. I will call it the physical approach. In an article about intelligent machines, this approach leads to a philosophical debate focused on the nature of humans rather than on the nature of computers: we end up having to defend our own free will anyway, and we say something about computers only because that is what the article was supposed to be about. In other words, the discussion turns into a comparison between humans and computers, in which neither humans nor computers can recognize themselves.

The second approach, subtly suggested in the first paragraph of this section, focuses on the impossibility of denying our own free will. As mentioned before, this denial makes no sense. But it not only undermines itself; it also destroys the foundations of responsibility as a whole. It would mean that we cannot praise or blame people for what they say or do, so we would have to reconsider the principles of justice, work, friendship, love, and everything else we have built our society on. All of these social institutions involve choice, and whenever it comes to choices, the concept of free will is essential. The essence of this is that free will is an important presumption for our society, regardless of whether it is physically justified. I will call this the social approach.

It is a difficult question whether the presumption of free will is necessary only for our society or for any human society. I will examine this question anyway, as it may provide a more philosophical justification for the importance of free will than simply pointing to the structure of our own society. It seems impossible to answer without reconsidering human nature and thus reintroducing a physical approach to free will. But when we state that interaction is the core of human civilization, the need for the concept of free will arises naturally. We cannot interact with people without assuming that they are free to influence the course of the interaction, since any human interaction implies that we do not know the outcome in advance. So, interactions are characterized by choice and thus by the concept of free will. If interactions are fundamental in any society, we also have to state that free will cannot be denied in any society.

Different Approaches to the Philosophy of Artificial Intelligence

Symbolic artificial intelligence (AI) is a subfield of AI that focuses on processing and manipulating symbols or concepts rather than numerical data. The aim of symbolic artificial intelligence is to create intelligent systems that can reason and think like humans by representing and manipulating knowledge and reasoning based on logical rules.

Algorithms for symbolic artificial intelligence work by processing symbols that represent objects or concepts in the world and their connections. The main approach in symbolic artificial intelligence is to use logic programming, where rules and axioms are used to draw conclusions. For example, we have a symbolic artificial intelligence system that is designed to diagnose diseases based on symptoms reported by a patient. The system has a set of rules and axioms that it uses to draw conclusions about the patient’s condition.

For instance, if a patient reports a fever, the system may apply the following rule: IF the patient has a fever AND is coughing AND is having difficulty breathing, THEN the patient may have pneumonia.

Then the system will check whether the patient also has a cough and difficulty breathing, and if so, it will conclude that the patient may have pneumonia.
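The rule above can be sketched as a very small rule-based system. This is only an illustrative sketch, not a real diagnostic tool: the `Rule` class, the `diagnose` function, and the symptom strings are hypothetical names chosen for this example, and the single rule is the one given in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """IF every symptom in `conditions` is present THEN `conclusion` may hold."""
    conditions: frozenset
    conclusion: str


# Hypothetical rule base; it contains only the pneumonia rule from the text.
RULES = [
    Rule(frozenset({"fever", "cough", "difficulty breathing"}), "possible pneumonia"),
]


def diagnose(symptoms, rules=RULES):
    """Return (conclusion, matched conditions) for every rule whose conditions all hold."""
    facts = set(symptoms)
    return [
        (rule.conclusion, sorted(rule.conditions))
        for rule in rules
        if rule.conditions <= facts  # subset test: all conditions were reported
    ]


if __name__ == "__main__":
    print(diagnose(["fever", "cough", "difficulty breathing"]))
    print(diagnose(["fever"]))  # the rule does not fire on fever alone
```

Because each conclusion is returned together with the conditions that triggered it, the reasoning can be traced back to the rule that was applied, and changing the system's behavior amounts to editing the rule list.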

This approach is very easy to interpret because we can easily trace the reasoning process back to the logical rules that were applied. It also makes it easy to change and update the rules of the system as new information becomes available.

Symbolic AI uses formal languages, such as logic, to represent knowledge. This knowledge is processed by reasoning mechanisms that use algorithms to manipulate symbols. This allows for the creation of expert systems and decision support systems that can draw conclusions based on predefined rules and knowledge.

Symbolic artificial intelligence differs from other AI methods, such as machine and deep learning, as it does not require large amounts of training data. Instead, symbolic AI is based on knowledge representation and reasoning, which makes it more suitable for fields where knowledge is clearly defined and can be represented in logical rules.

On the other hand, machine learning requires large data sets to learn patterns and make predictions. Deep learning uses neural networks to learn features directly from data which makes it suitable for domains with complex and unstructured data.

Which technique to apply depends on the subject area and the data available. Symbolic artificial intelligence is suitable for areas with well-defined and structured knowledge, while machine and deep learning are suitable for areas with large amounts of data and complex patterns.

The connectionist approach to artificial intelligence philosophy is based on the principles of neural networks and their similarity to the human brain. This approach is intended to imitate the behavior of interconnected neurons in biological systems to process information and learn from data. Here are some key aspects of the connectionist approach.

The connectionist approach involves the creation of artificial neural networks consisting of interconnected units, often referred to as artificial neurons or nodes. These artificial neurons are designed to receive input data, perform computations, and transmit signals to other neurons in the network.

The connectionist approach assumes that artificial neurons in a network work together to process information. Each neuron receives input signals, performs calculations based on them, and transmits output signals to other neurons. The output of the network is determined by the collective activity of its neurons, while information flows through the connections between them. An important aspect of the connectionist approach is the ability of artificial neural networks to learn from data. During the learning process, the network adjusts the strength of connections (weights) between neurons based on the input data and the desired outcome. Based on the iterative comparison of the predicted output of the network with the expected outcome, the weights are updated to minimize the differences and improve the network performance.

Connectionist systems emphasize parallel processing, where several computations are performed simultaneously across the network. This ensures efficient and reliable information processing. In addition, connectionist models use a distributed representation, meaning that information is encoded across multiple neurons rather than being localized in a single location. This distributed representation enables the network to process complex patterns and generalize from limited examples.

The connectionist approach is the foundation of deep learning, a subfield of artificial intelligence that focuses on training deep neural networks with several layers. Deep learning models have been extremely successful in various fields such as computer vision, natural language processing, and speech recognition. They have demonstrated the ability to automatically learn hierarchical data representations, which provides advanced performance in complex tasks.

In general, the connectionist approach to artificial intelligence philosophy emphasizes the use of artificial neural networks to imitate the cooperative and parallel nature of human brain processing. By learning from data through weight adjustments, connectionist systems have proven to be highly effective in solving complex problems and achieving impressive results in AI applications.

A neural network is a computational model inspired by the structure and functioning of biological neural networks such as the human brain. It is a mathematical structure composed of interconnected nodes (artificial neurons) arranged in layers. Neural networks are intended to process and learn data, allowing them to recognize patterns, make predictions and perform various tasks.

Artificial neurons are the basic units of a neural network. Each neuron receives one or more inputs, performs calculations on them, and produces an output. The output is usually transmitted to other neurons in the network.

The neurons in a neural network are linked by connections that represent the flow of information between them. Each connection is associated with a weight that determines the strength or importance of the signal being transmitted. The weights are adjusted during the learning process to optimize network performance.

Neural networks are usually arranged in layers. The input layer receives the initial data, while the output layer produces the final result or prediction, and there may be one or more hidden layers in between. The hidden layers allow the network to learn complex representations by transforming and combining input information.

Each neuron applies an activation function to a weighted sum of its inputs to produce an output signal. The activation function brings non-linearity to the network, allowing it to model complex relationships and make non-linear predictions.
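As a minimal sketch, a single artificial neuron can be written in a few lines of Python. The choice of the sigmoid as activation function and the added bias term are assumptions made for this example; the text does not name a specific activation function or mention a bias, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias=0.0):
    """Apply the activation function to the weighted sum of the inputs.

    `bias` is an assumption of this sketch, not something stated in the text.
    """
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)


if __name__ == "__main__":
    # The weighted sum is 0.5 * 1.0 + (-0.25) * 2.0 = 0.0, and sigmoid(0.0) = 0.5.
    print(neuron_output([1.0, 2.0], [0.5, -0.25]))
```

Without the activation function the neuron would compute only a linear function of its inputs; the sigmoid is what introduces the non-linearity.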

Neural networks process data based on the feedforward principle. Input data pass through the network layer by layer, with calculations performed on each neuron. The output of one layer serves as input to the next layer until the final result is generated.
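The feedforward principle can be illustrated with a short sketch in plain Python. It assumes fully connected layers, a sigmoid activation, and a bias per neuron, none of which is specified in the text; the function names and the example weights are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation, used here as an assumed example of a non-linearity."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute the outputs of one layer.

    weights[j] holds the incoming weights of neuron j; biases[j] is its bias.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Pass the inputs through the network layer by layer.

    `layers` is a list of (weights, biases) pairs, ordered from the first
    hidden layer to the output layer.
    """
    activations = inputs
    for weights, biases in layers:
        # The output of one layer becomes the input of the next.
        activations = layer_forward(activations, weights, biases)
    return activations


if __name__ == "__main__":
    # Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
    network = [
        ([[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]], [0.0, 0.1, -0.1]),
        ([[0.7, -0.3, 0.5]], [0.05]),
    ]
    print(feedforward([1.0, 2.0], network))
```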

Neural networks learn from data through a process called training. During training, the input data are presented to the network along with the corresponding desired outputs. The network’s predictions are compared with the desired outputs, and its weights are adjusted using algorithms such as gradient descent and backpropagation. This iterative process allows the network to minimize the difference between its predictions and the expected outcomes.
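The training loop can be sketched for the simplest possible case: a single linear neuron trained by gradient descent on the mean squared error. This is a deliberately reduced illustration rather than full backpropagation, which applies the same idea through several layers using the chain rule. The loss function, learning rate, number of epochs, and toy data are all assumptions of this example.

```python
def train_linear_neuron(samples, learning_rate=0.05, epochs=2000):
    """Fit prediction = w * x + b to (x, target) pairs by gradient descent.

    Returns the learned (w, b). Mean squared error is the assumed loss.
    """
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in samples:
            error = (w * x + b) - target   # prediction minus desired output
            grad_w += 2.0 * error * x / n  # d(MSE)/dw
            grad_b += 2.0 * error / n      # d(MSE)/db
        # Move the weights a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    # Toy data generated from target = 2 * x + 1.
    data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
    w, b = train_linear_neuron(data)
    print(round(w, 4), round(b, 4))
```

Each pass compares the predictions with the desired outputs and nudges the weights in the direction that reduces the error, which is the iterative adjustment described above.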

Deep neural networks (DNNs) refer to neural networks with several hidden layers. Deep learning, which focuses on training deep neural networks, has attracted considerable attention in recent years due to its ability to automatically learn hierarchical representations and extract complex patterns from data.

Neural networks have been very successful in a variety of areas, including image recognition, natural language processing, speech synthesis, and more. They are capable of processing large amounts of data, generalizing from examples, and performing complex computations, which makes them a powerful tool in the field of artificial intelligence.

Philosophical Issues and Debates in the Field of Artificial Intelligence

“No one has the slightest idea of how something material can be conscious. No one even knows what it would be like to have the slightest idea of how something material can be conscious.” (Jerry Fodor, Ernest Lepore, “Holism: a Shopper’s Guide”, Blackwell, 1992). Jerry Fodor is credited with these words, and I believe they explain all the difficulties I have faced in trying to figure out how a machine can be conscious. However, these words do not encourage me to abandon my attempts to claim that a machine with artificial consciousness can be created. In fact, they do the opposite; they encourage me to think that if we (material beings) can be conscious, then consciousness must be a material thing and, therefore, in theory, it can be created artificially.

The key point about consciousness is that it is not one thing. It is a set of polymorphic concepts, all of which are mixed up in different ways. So, it is difficult to untangle them all and try to explain each one separately. It is important to keep this in mind because, while I do my best to explain some aspects of it, their interconnection makes this difficult. In my conclusions, I attempt to combine all of these concepts to justify the feasibility of strong artificial consciousness in a virtual machine.

In general, artificial consciousness (hereinafter referred to as AC) is divided into two kinds: weak AC and strong AC. Weak AC is “a simulation of conscious behavior”. It can be implemented as an intelligent program that simulates the behavior of a conscious being at a certain level of detail without understanding the mechanisms generating consciousness. Strong AC is “real conscious thinking that comes from a sophisticated computing machine (artificial brain). In this case, the main difference from its natural equivalent depends on the hardware that generates the process.” However, some scholars, such as Chrisley, argue that there are many intermediate forms of AC, which he calls lagom artificial consciousness.

With computing innovations growing exponentially every year, the feasibility of strong AC is becoming increasingly relevant. As artificial intelligence (hereinafter referred to as AI) moves from the pages of science fiction to the field of science, more and more scientists and philosophers are taking a closer look at it. Many of the world’s leading thinkers, including Stephen Hawking, Elon Musk, and Bill Gates, have recently signed an open letter calling for AI to be used responsibly and for the benefit of all humanity. The present discussion is not concerned with that kind of artificial intelligence (purely intellectual), nor with the so-called “machine question”, which asks: “How should we program AI?”, i.e., what ethical doctrine should it be taught, and why?

Although these topics are interesting and extremely important, there is simply not enough time here to provide an in-depth analysis of these issues. For more on this, see Nick Bostrom, Miles Brundage, and George Lazarus, to name but a few.

We already know that a machine can act intelligently and that it can use logic to solve problems and find solutions, because we have programmed it to do so. But doubts about a machine’s capacity for phenomenal consciousness are widespread. We are said to differ from machines in that we have feelings, experiences, free will, beliefs, etc. Although most people agree that there is a certain “program” in our genetics and biology, they are certain that they can make their own choices and that an artificial computer program cannot reproduce their unique first-person subjective experience.

However, this claim would not be interesting if there were no chance that a machine capable of producing strong AC could exist. The most cited theory of consciousness compatible with strong AC is functionalism, which holds that consciousness is defined by its function. The theory is simple in outline, but it comes in several varieties. It is known for its association with Alan Turing, Turing machines, and the Turing test. A descendant of behaviorism, it (sometimes) takes a computational view of the mind and holds that functions are the true measure of consciousness. It is also to some extent known for failing to explain phenomenal consciousness, qualitative states, and qualia. Though there are many answers to this puzzle, I am in favor of an ontologically conservative eliminativist view of qualitative states. What makes it eliminativist is that I claim that qualia, as they are usually defined, cannot exist. However, I reject the idea that our intuitive understanding of qualia and qualitative states is wrong. The concept of qualia is simply misunderstood. They can be created artificially. This is a sub-theory of the larger virtual machine functionalism, according to which a conscious being is not limited to one particular mental state at a time but is always in several states simultaneously. In a virtual machine, this is explained by different systems and subsystems.

The final criterion for a moral agent (and the final requirement of the three-condition theory of autonomy, i.e., acting reasonably) is rationality. This criterion is probably the least controversial for an artificial agent, which is why I put it last. Rationality and logic are the defining features of artificial agents in popular culture. Modern computers and weak AI systems are known for their logic. They perform large computations and can make extremely complex decisions quickly and rationally. However, the rationality of artificial agents is not devoid of controversy. As seen earlier, Searle raises concerns about a machine’s ability to actually think and understand, as he claims that syntax alone can never amount to semantics. I have already covered the Chinese room and my response to this problem, but I would like to highlight once again the polymorphic nature of consciousness and the importance of accounting for qualia and phenomenal consciousness in a theory of consciousness.

It should be mentioned that autonomous rationality and rationality in general are not the same thing. In terms of autonomy, rationality is the act of exercising your will so that you can go beyond your “animal instincts” and live your life according to your own rational rules; it implies thinking before you act. In this respect, the rationality of modern computers and weak AI systems is not autonomous. They have no choice; they simply do what they are programmed to do. In some respects, this is related to the deterministic following of algorithms discussed above, as it concerns free choice. As we have seen, virtual machines can be quite complex: phenomenally conscious, non-deterministic (“free”), internally intentional, and sentient (capable of experiencing beliefs, desires, pain, and pleasure). But when all is said and done, such a system is still a machine: one that, if it were to reach this level of complexity, would be designed precisely, rationally, algorithmically, and architecturally. The “cold rationality” of algorithmic computers, combined with consciousness, would make it autonomously rational. Its feelings, i.e., its emotions, phenomenal consciousness, ability to experience pain and pleasure, and hence its beliefs and desires, would enable it to overcome hedonistic impulses and make rational and autonomous decisions.

Artificial intelligence (AI) is a very dynamic field of research today. It was founded in the 1950s and is still alive today. During AI development, different ways of research have encouraged competition, and new challenges and ideas have continued to emerge. On the one hand, there is much resistance to theoretical development, but on the other hand, technological advances have achieved brilliant results, which is rare in the history of science.

The aim of AI and its technological solutions is to reproduce human intelligence using machines. As a result, the objects of its research overlap the material and spiritual spheres, which is quite complex. The features of intelligence determine the meandering nature of AI development, and many of the problems faced by AI are directly related to philosophy. It is easy to notice that many AI experts have a strong interest in philosophy; similarly, AI research results have also attracted much attention from the philosophical community.

As the fundamental research behind the modern science of artificial intelligence, cognitive research aims to understand clearly the structure and processes of human consciousness and to provide a logical account of how intelligence, emotion, and intention combine in it, so that artificial intelligence experts can express these processes formally. In order to imitate human consciousness, artificial intelligence must first learn the structure and operation of consciousness. How is consciousness possible? Searle said: “The best way to explain how something is possible is to reveal how it actually exists.” This allows cognitive science to advance the development of artificial intelligence, and it is the most important reason why the cognitive turn is occurring. The turn stems from the synergistic relationship between philosophy and cognitive psychology, cognitive neuroscience, brain science, artificial intelligence, and other disciplines.

However computer science and technology develop, from physical symbol systems, expert systems, and knowledge engineering to biological computers and quantum computers, that development is inseparable from philosophy’s knowledge and understanding of the whole process of human consciousness and the factors involved in it. Whether one belongs to the strong or the weak school of artificial intelligence, from an epistemological point of view artificial intelligence relies on a system of physical symbols to simulate some functions of human thinking. However, its true simulation of human consciousness depends not only on the technological innovations of the robot itself but also on the philosophical understanding of the process of consciousness and the factors that influence it. From the current point of view, the philosophical problem of artificial intelligence is not the question of what the essence of artificial intelligence is, but rather the solution of some more specific problems of modeling intelligence.

Regarding the question of intentionality, can a machine have a mind, or consciousness? If so, can it intentionally harm people?

The debate over whether computers are intentional can be summarised as follows:

- What is intentionality? Is it intentional that a robot behaves in a certain way according to instructions?

- People already know what they are doing before they act. They have self-awareness and know what their actions will lead to. This is an important feature of human consciousness. So, how should we understand that a robot behaves in a certain way according to instructions?

- Can intentionality be programmed? Searle believes that “the way the brain functions to create the mind cannot be a way of simply operating a computer programme”. Instead, people should ask: is intentionality something intelligible? If it can be understood, why can’t it be programmed? Searle believes that computers have syntax but not semantics. But, in fact, syntax and semantics are two sides of one issue, and they are never separated. If a program can incorporate syntax and semantics together, do we need to distinguish between them? Searle argues that even if a computer copies intentionality, the copy is not the original. In fact, once we have a clear understanding of human cognition and its connection to human behavior, we should be able to program the connection between our mental processes and the behavior of the human brain, and to input everything we know: the information that would make a computer “know everything”. But at that point, could we still say, with Searle, that artificial intelligence is not intelligence? Does artificial intelligence have no intentionality and no thought processes because it lacks human proteins and nerve cells? Is a copy of intentionality “intentional”? Is a copy of understanding real “understanding”? Is a duplicate of thinking “thinking”? Our answer is that the basis is different, but the function is the same. Relying on a different basis to realize the same function, artificial intelligence is just a special way of realizing our human intelligence. Searle uses intentionality to deny the depth of artificial intelligence. Yet once artificial intelligence can simulate human thought, even those who hold that artificial and human intelligence differ significantly will find that the difference is no longer relevant. Searle’s point of view can only mystify the human mind again!

As for the issue of intelligence, can machines solve problems using intelligence in the same way that humans do? Or is there a limit to the complexity of the problems that a machine’s intelligence can solve?

People can unconsciously draw on so-called hidden abilities, or tacit knowledge. According to Polanyi, “People know more than they can express”. This involves cycling and warming up, as well as higher-level practical skills. Unfortunately, if we do not understand the rules ourselves, we cannot teach them to a computer. This is Polanyi’s paradox. To solve this problem, computer scientists did not try to change human intelligence but developed a new way of thinking for artificial intelligence: thinking by means of data.

Rich Caruana, senior research scientist at Microsoft Research, said: “You might think that the principle of artificial intelligence is that we first understand humans and then create artificial intelligence in the same way, but that is not the case.” He said, “Take planes as an example. They were built long before there was any understanding of how birds fly. The principles of aerodynamics were different, but today our planes fly higher and faster than any animal”.

People today generally think that smart computers will take over our jobs. Before you finish your breakfast, they will have already completed your weekly workload, and they don’t take breaks, drink coffee, retire, or even need to sleep. But the truth is that while many tasks will be automated in the future, at least in the short term, this new type of intelligent machine is likely to work alongside us.

The problem with artificial intelligence is a modern version of Polanyi’s paradox. We do not fully understand the learning mechanism of the human brain, so we let artificial intelligence think in terms of statistics. The irony is that we currently have very little knowledge of how artificial intelligence thinks, so we have two unknown systems. This is often referred to as the “black box problem”: you know the input and output data, but you have no idea how the box in front of you came to its conclusion. Caruana said: “We now have two different types of intelligence, but we cannot fully understand both.”

An artificial neural network has no language capabilities, so it cannot explain what it is doing or why, and it lacks common sense, just like any artificial intelligence. People are increasingly concerned that some AI operations can conceal hidden biases, such as sexism or racial discrimination. For example, a recent piece of software is used to assess the probability that convicted offenders will reoffend. It is twice as tough on Black people. If the data such systems receive are impeccable, their decisions are likely to be correct, but most often the data are subject to human bias.
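The point that a system trained on biased data reproduces that bias can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the two groups, the record counts, and the function `fit_risk_by_group` are hypothetical, and the factor of two is chosen only to echo the example above. It does not describe the software mentioned in the text. The "model" simply learns the recorded reoffence rate per group, so a skew in how the records were collected passes straight into its risk scores.

```python
from collections import Counter

# Hypothetical historical records: (group, recorded_as_reoffender).
# Assumption: both groups reoffend at the same true rate, but group "B"
# was monitored more heavily, so more of its reoffences were recorded.
records = (
    [("A", True)] * 20 + [("A", False)] * 80
    + [("B", True)] * 40 + [("B", False)] * 60
)


def fit_risk_by_group(rows):
    """Estimate risk as the recorded reoffence frequency within each group."""
    totals = Counter(group for group, _ in rows)
    positives = Counter(group for group, label in rows if label)
    return {group: positives[group] / totals[group] for group in totals}


risk = fit_risk_by_group(records)
print(risk)                   # {'A': 0.2, 'B': 0.4}
print(risk["B"] / risk["A"])  # 2.0
```

The arithmetic in the model is correct, yet its output is twice as harsh on one group, because the bias sits in the data rather than in the code.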

As for the issue of ethics, can machines be dangerous to humans? How can scientists make sure that machines behave ethically and do not pose a threat to humans?

There is much debate among scientists about whether machines can feel emotions such as love or hate. They also believe that humans have no reason to expect AI to consciously strive for good or evil. When considering how artificial intelligence could become a risk, experts believe that two scenarios are most probable:

- AI is designed to perform destructive tasks: autonomous weapons are artificial intelligence systems designed to kill. If these weapons fall into the wrong hands, they can easily cause a lot of damage. In addition, an AI arms race could inadvertently spark an AI war, resulting in a large number of victims. To avoid interference from enemy forces, “closed” weapon programs will be designed to be extremely complex, and humans may therefore also lose control in such situations. While this risk already exists with special artificial intelligence (narrow AI), it will grow as AI becomes more intelligent and more self-directed.

- AI is designed to perform useful tasks, but the process it follows can be destructive: this can happen when human and artificial intelligence goals have not been fully aligned, and aligning them is not an easy task. Just imagine that you ask a smart car to take you to the airport as fast as possible. It might follow your instructions to the letter, even in ways you don’t want: you might be chased by a helicopter or vomit because of the speeding. If the purpose of a super-smart system is an ambitious geo-engineering project, a side effect could be the destruction of the ecosystem, and human attempts to stop this would be seen as a threat that must be eliminated.
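The smart-car scenario can be sketched as a small optimization problem. The routes, their numbers, the speed limit, and both cost functions below are hypothetical and chosen only for illustration. The sketch contrasts an objective that takes "as fast as possible" literally with one that also encodes what the passenger implicitly wants.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    minutes: float        # travel time to the airport
    max_speed_kmh: float  # highest speed reached on the route


# Hypothetical routes; the figures are invented for illustration.
ROUTES = [
    Route("reckless shortcut", minutes=18, max_speed_kmh=190),
    Route("motorway", minutes=25, max_speed_kmh=120),
    Route("scenic road", minutes=40, max_speed_kmh=80),
]

SPEED_LIMIT_KMH = 130  # assumed legal and comfortable limit


def literal_cost(route: Route) -> float:
    """The instruction as literally stated: minimize travel time only."""
    return route.minutes


def aligned_cost(route: Route, penalty_per_kmh: float = 1.0) -> float:
    """Travel time plus a penalty for every km/h above the limit."""
    excess = max(0.0, route.max_speed_kmh - SPEED_LIMIT_KMH)
    return route.minutes + penalty_per_kmh * excess


literal_choice = min(ROUTES, key=literal_cost)
aligned_choice = min(ROUTES, key=aligned_cost)
print(literal_choice.name)  # reckless shortcut
print(aligned_choice.name)  # motorway
```

The optimizer is the same in both cases; only the stated goal differs. The difficulty the text describes lies in writing down everything a human leaves unsaid, and a single speed penalty is of course far from a complete answer.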

As for the issue of conceptuality, there are problems with the conceptual basis of artificial intelligence.

Any science is based on what it knows, and even the ability of scientific observation is linked to well-known things. We can only rely on what we know to understand the unknown. The known and the unknown are always a pair of contradictions, and they always coexist and depend on each other. Without the known, we cannot learn the unknown; without the unknown, we cannot ensure the development and evolution of scientific knowledge. There is a lot of evidence that when people observe objects, the experience the observer has is not determined solely by the light that enters the eye or by the image on the observer’s retina. Even two people looking at the same object will get different visual impressions. As Hanson said, when an observer looks at an object, he sees much more than meets the eyeball. Observations are very important for science, but “statements about observations must be made in the language of a particular theory”. “Statements about observations are public subjects and are made in public language. They contain theories of varying degrees of universality and complexity.” This shows that observation requires theory. Science needs theory as a predecessor, and scientific understanding is not based on the unknown. Businesses, likewise, often lack an understanding of the options that suit them best, and consulting services for artificial intelligence try to help them navigate with AI.

Impact of Philosophy on the Development and Application of Artificial Intelligence

Despite clear differences in approaches, technology (in general) and philosophy share the same object of interest: people.

The aim of technology development is to solve a specific practical problem in everyday life and thus increase its usefulness for humanity in the near future. But in most cases, the scope of technological development does not go beyond the practical and current problems it addresses. There is simply no need to do so if the problem can be technically solved. Technology always pursues one goal: to be useful. It seems to be a purely instrumental approach (M. Taddeo and L. Floridi, “How AI can be a force for good,” Science, Aug. 2018) that rarely cares about the side effects of its products.

By contrast, philosophy deals not only with current issues and practical aspects of human existence. In order to form the broadest possible view of a particular topic, philosophical analysis examines not only the object of study itself but also its ethical implications and other possible influences on human matters. Part of this is the study of the emergence, development, and nature of values. Therefore, careful analysis and criticism of general positions and current events to find changes in a particular value system is the main task in the field of philosophy.

Philosophy and Technology: Contradiction or Symbiosis?

In short, philosophy usually raises new issues and problems, while the purpose of technology, especially AI, is naturally to solve specific and existing problems. Given this, the symbiosis between these two fields seems paradoxical at first glance.

However, when philosophy keeps asking new questions and criticizing proposed technological solutions, especially by examining the underlying problem in a precise philosophical manner, technology can offer longer-term and more detailed solutions. Philosophy provides the tools for this anticipatory process, such as logical analysis, ethical and moral examination, and a deep methodology for asking the right questions. To put this in perspective: how will AI impact the future of work?

This definitely complements the forward-looking development of new technologies. When the development process takes into account as many possible outcomes of both the problem and the proposed technical solution as possible, future problems can be solved in a sustainable way. All of this applies to artificial intelligence as a subset of technology, which should now be defined as “the science and technology of creating intelligent machines, particularly intelligent software” (“Closer to the Machine: Technical, Social, and Legal Aspects of AI”, Office of the Victorian Information Commissioner, Toby Walsh, Kate Miller, Jake Goldenfein, Fang Chen, Jianlong Zhou, Richard Nock, Benjamin Rubinstein, Margaret Jackson, 2019).

But the connection between artificial intelligence and philosophy is much more far-reaching.

AI and Philosophy: A Special Connection

The unique connection between artificial intelligence and philosophy has already been highlighted by computer scientist John McCarthy. Although philosophy complements all technical sciences in general, it is crucial for artificial intelligence in particular and provides a fundamental methodology for the field.

Philosophers have developed some of the basic concepts of AI. Examples include “…the study of the features that an artifact must possess in order to be considered intelligent” (“Industrial revolutions: the 4 main revolutions in the industrial world,” Sentryo, Feb. 23, 2017), or the elementary concept of rationality, which also emerged from philosophical discourse.

What is more interesting in this context is the fact that philosophy is required to guide the evolution of artificial intelligence and organize its integration into our lives, as it concerns not only trivial technologies but also completely new and unexplored ethical and social issues.

News

Johns Hopkins Researchers Uncover a New Way To Kill Cancer Cells

A new study reveals that blocking ribosomal RNA production rewires cancer cell behavior and could help treat genetically unstable tumors. Researchers at the Johns Hopkins Kimmel Cancer Center and the Department of Radiation Oncology and Molecular [...]

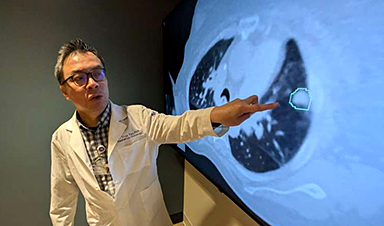

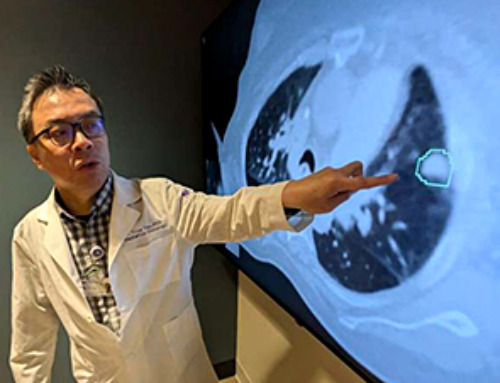

AI matches doctors in mapping lung tumors for radiation therapy

In radiation therapy, precision can save lives. Oncologists must carefully map the size and location of a tumor before delivering high-dose radiation to destroy cancer cells while sparing healthy tissue. But this process, called [...]

Scientists Finally “See” Key Protein That Controls Inflammation

Researchers used advanced microscopy to uncover important protein structures. For the first time, two important protein structures in the human body are being visualized, thanks in part to cutting-edge technology at the University of [...]

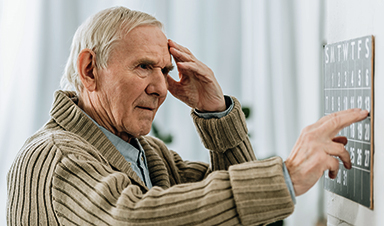

AI tool detects 9 types of dementia from a single brain scan

Mayo Clinic researchers have developed a new artificial intelligence (AI) tool that helps clinicians identify brain activity patterns linked to nine types of dementia, including Alzheimer's disease, using a single, widely available scan—a transformative [...]

Is plastic packaging putting more than just food on your plate?

New research reveals that common food packaging and utensils can shed microscopic plastics into our food, prompting urgent calls for stricter testing and updated regulations to protect public health. Beyond microplastics: The analysis intentionally [...]

Aging Spreads Through the Bloodstream

Summary: New research reveals that aging isn’t just a local cellular process—it can spread throughout the body via the bloodstream. A redox-sensitive protein called ReHMGB1, secreted by senescent cells, was found to trigger aging features [...]

AI and nanomedicine find rare biomarkers for prostrate cancer and atherosclerosis

Imagine a stadium packed with 75,000 fans, all wearing green and white jerseys—except one person in a solid green shirt. Finding that person would be tough. That's how hard it is for scientists to [...]

Are Pesticides Breeding the Next Pandemic? Experts Warn of Fungal Superbugs

Fungicides used in agriculture have been linked to an increase in resistance to antifungal drugs in both humans and animals. Fungal infections are on the rise, and two UC Davis infectious disease experts, Dr. George Thompson [...]

Scientists Crack the 500-Million-Year-Old Code That Controls Your Immune System

A collaborative team from Penn Medicine and Penn Engineering has uncovered the mathematical principles behind a 500-million-year-old protein network that determines whether foreign materials are recognized as friend or foe. How does your body [...]

Team discovers how tiny parts of cells stay organized, new insights for blocking cancer growth

A team of international researchers led by scientists at City of Hope provides the most thorough account yet of an elusive target for cancer treatment. Published in Science Advances, the study suggests a complex signaling [...]

Nanomaterials in Ophthalmology: A Review

Eye diseases are becoming more common. In 2020, over 250 million people had mild vision problems, and 295 million experienced moderate to severe ocular conditions. In response, researchers are turning to nanotechnology and nanomaterials—tools that are transforming [...]

Natural Plant Extract Removes up to 90% of Microplastics From Water

Researchers found that natural polymers derived from okra and fenugreek are highly effective at removing microplastics from water. The same sticky substances that make okra slimy and give fenugreek its gel-like texture could help [...]

Instant coffee may damage your eyes, genetic study finds

A new genetic study shows that just one extra cup of instant coffee a day could significantly increase your risk of developing dry AMD, shedding fresh light on how our daily beverage choices may [...]

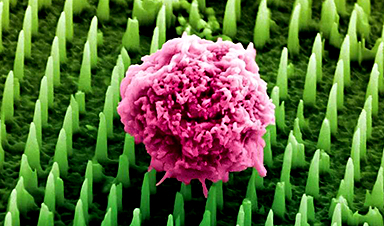

Nanoneedle patch offers painless alternative to traditional cancer biopsies

A patch containing tens of millions of microscopic nanoneedles could soon replace traditional biopsies, scientists have found. The patch offers a painless and less invasive alternative for millions of patients worldwide who undergo biopsies [...]

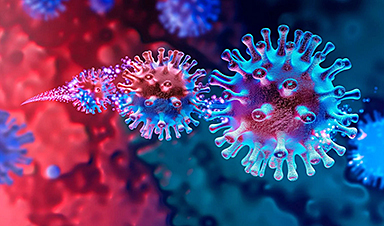

Small antibodies provide broad protection against SARS coronaviruses

Scientists have discovered a unique class of small antibodies that are strongly protective against a wide range of SARS coronaviruses, including SARS-CoV-1 and numerous early and recent SARS-CoV-2 variants. The unique antibodies target an [...]

Controlling This One Molecule Could Halt Alzheimer’s in Its Tracks

New research identifies the immune molecule STING as a driver of brain damage in Alzheimer’s. A new approach to Alzheimer’s disease has led to an exciting discovery that could help stop the devastating cognitive decline [...]