When covid-19 struck Europe in March 2020, hospitals were plunged into a health crisis that was still badly understood. “Doctors really didn’t have a clue how to manage these patients,” says Laure Wynants, an epidemiologist at Maastricht University in the Netherlands, who studies predictive tools.

But there was data coming out of China, which had a four-month head start in the race to beat the pandemic. If machine-learning algorithms could be trained on that data to help doctors understand what they were seeing and make decisions, it just might save lives. “I thought, ‘If there’s any time that AI could prove its usefulness, it’s now,’” says Wynants. “I had my hopes up.”

It never happened—but not for lack of effort. Research teams around the world stepped up to help. The AI community, in particular, rushed to develop software that many believed would allow hospitals to diagnose or triage patients faster, bringing much-needed support to the front lines—in theory.

In the end, many hundreds of predictive tools were developed. None of them made a real difference, and some were potentially harmful.

That’s the damning conclusion of multiple studies published in the last few months. In June, the Alan Turing Institute, the UK’s national center for data science and AI, put out a report summing up discussions at a series of workshops it held in late 2020. The clear consensus was that AI tools had made little, if any, impact in the fight against covid.

Not fit for clinical use

This echoes the results of two major studies that assessed hundreds of predictive tools developed last year. Wynants is lead author of one of them, a review in the British Medical Journal that is still being updated as new tools are released and existing ones tested. She and her colleagues have looked at 232 algorithms for diagnosing patients or predicting how sick those with the disease might get. They found that none of them were fit for clinical use. Just two have been singled out as being promising enough for future testing.

“It’s shocking,” says Wynants. “I went into it with some worries, but this exceeded my fears.”

Wynants’s study is backed up by another large review, carried out by Derek Driggs, a machine-learning researcher at the University of Cambridge, and his colleagues and published in Nature Machine Intelligence. This team zoomed in on deep-learning models for diagnosing covid and predicting patient risk from medical images, such as chest x-rays and chest computed tomography (CT) scans. They looked at 415 published tools and, like Wynants and her colleagues, concluded that none were fit for clinical use.

“This pandemic was a big test for AI and medicine,” says Driggs, who is himself working on a machine-learning tool to help doctors during the pandemic. “It would have gone a long way to getting the public on our side,” he says. “But I don’t think we passed that test.”

Both teams found that researchers repeated the same basic errors in the way they trained or tested their tools. Incorrect assumptions about the data often meant that the trained models did not work as claimed.

Wynants and Driggs still believe AI has the potential to help. But they are concerned that tools built the wrong way could be harmful, because they could miss diagnoses or underestimate risk for vulnerable patients. “There is a lot of hype about machine-learning models and what they can do today,” says Driggs.

Unrealistic expectations encourage the use of these tools before they are ready. Wynants and Driggs both say that a few of the algorithms they looked at have already been used in hospitals, and some are being marketed by private developers. “I fear that they may have harmed patients,” says Wynants.

So what went wrong? And how do we bridge the gap between what AI promised and what it delivered? If there’s an upside, it is that the pandemic has made it clear to many researchers that the way AI tools are built needs to change. “The pandemic has put problems in the spotlight that we’ve been dragging along for some time,” says Wynants.

What went wrong

Many of the problems that were uncovered are linked to the poor quality of the data that researchers used to develop their tools. Information about covid patients, including medical scans, was collected and shared in the middle of a global pandemic, often by the doctors struggling to treat those patients. Researchers wanted to help quickly, and these were the only public data sets available. But this meant that many tools were built using mislabeled data or data from unknown sources.

News

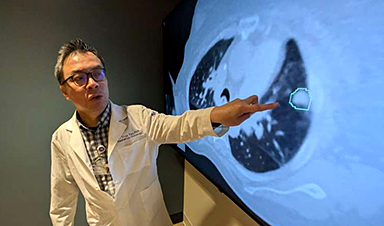

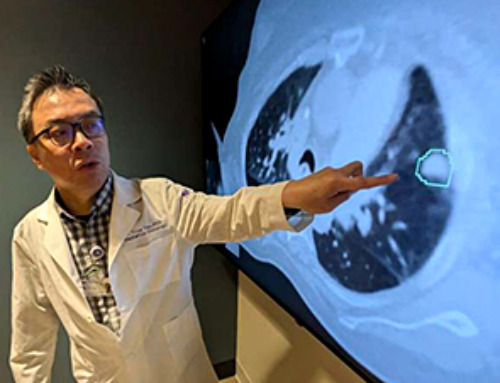

AI matches doctors in mapping lung tumors for radiation therapy

In radiation therapy, precision can save lives. Oncologists must carefully map the size and location of a tumor before delivering high-dose radiation to destroy cancer cells while sparing healthy tissue. But this process, called [...]

Scientists Finally “See” Key Protein That Controls Inflammation

Researchers used advanced microscopy to uncover important protein structures. For the first time, two important protein structures in the human body are being visualized, thanks in part to cutting-edge technology at the University of [...]

AI tool detects 9 types of dementia from a single brain scan

Mayo Clinic researchers have developed a new artificial intelligence (AI) tool that helps clinicians identify brain activity patterns linked to nine types of dementia, including Alzheimer's disease, using a single, widely available scan—a transformative [...]

Is plastic packaging putting more than just food on your plate?

New research reveals that common food packaging and utensils can shed microscopic plastics into our food, prompting urgent calls for stricter testing and updated regulations to protect public health. Beyond microplastics: The analysis intentionally [...]

Aging Spreads Through the Bloodstream

Summary: New research reveals that aging isn’t just a local cellular process—it can spread throughout the body via the bloodstream. A redox-sensitive protein called ReHMGB1, secreted by senescent cells, was found to trigger aging features [...]

AI and nanomedicine find rare biomarkers for prostrate cancer and atherosclerosis

Imagine a stadium packed with 75,000 fans, all wearing green and white jerseys—except one person in a solid green shirt. Finding that person would be tough. That's how hard it is for scientists to [...]

Are Pesticides Breeding the Next Pandemic? Experts Warn of Fungal Superbugs

Fungicides used in agriculture have been linked to an increase in resistance to antifungal drugs in both humans and animals. Fungal infections are on the rise, and two UC Davis infectious disease experts, Dr. George Thompson [...]

Scientists Crack the 500-Million-Year-Old Code That Controls Your Immune System

A collaborative team from Penn Medicine and Penn Engineering has uncovered the mathematical principles behind a 500-million-year-old protein network that determines whether foreign materials are recognized as friend or foe. How does your body [...]

Team discovers how tiny parts of cells stay organized, new insights for blocking cancer growth

A team of international researchers led by scientists at City of Hope provides the most thorough account yet of an elusive target for cancer treatment. Published in Science Advances, the study suggests a complex signaling [...]

Nanomaterials in Ophthalmology: A Review

Eye diseases are becoming more common. In 2020, over 250 million people had mild vision problems, and 295 million experienced moderate to severe ocular conditions. In response, researchers are turning to nanotechnology and nanomaterials—tools that are transforming [...]

Natural Plant Extract Removes up to 90% of Microplastics From Water

Researchers found that natural polymers derived from okra and fenugreek are highly effective at removing microplastics from water. The same sticky substances that make okra slimy and give fenugreek its gel-like texture could help [...]

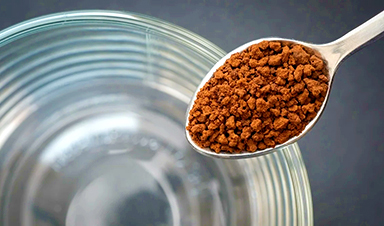

Instant coffee may damage your eyes, genetic study finds

A new genetic study shows that just one extra cup of instant coffee a day could significantly increase your risk of developing dry AMD, shedding fresh light on how our daily beverage choices may [...]

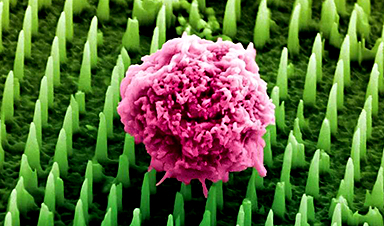

Nanoneedle patch offers painless alternative to traditional cancer biopsies

A patch containing tens of millions of microscopic nanoneedles could soon replace traditional biopsies, scientists have found. The patch offers a painless and less invasive alternative for millions of patients worldwide who undergo biopsies [...]

Small antibodies provide broad protection against SARS coronaviruses

Scientists have discovered a unique class of small antibodies that are strongly protective against a wide range of SARS coronaviruses, including SARS-CoV-1 and numerous early and recent SARS-CoV-2 variants. The unique antibodies target an [...]

Controlling This One Molecule Could Halt Alzheimer’s in Its Tracks

New research identifies the immune molecule STING as a driver of brain damage in Alzheimer’s. A new approach to Alzheimer’s disease has led to an exciting discovery that could help stop the devastating cognitive decline [...]

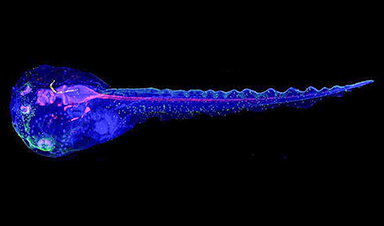

Cyborg tadpoles are helping us learn how brain development starts

How does our brain, which is capable of generating complex thoughts, actions and even self-reflection, grow out of essentially nothing? An experiment in tadpoles, in which an electronic implant was incorporated into a precursor [...]