The European Union will seek on Wednesday to thrash out an agreement on sweeping rules to regulate artificial intelligence, following months of difficult negotiations, in particular over how to monitor generative AI applications like ChatGPT.

ChatGPT wowed users with its ability to produce poems and essays within seconds from simple user prompts.

AI proponents say the technology will benefit humanity, transforming everything from work to health care, but others worry about the risks it poses to society, fearing it could thrust the world into unprecedented chaos.

Brussels is bent on bringing big tech to heel with a powerful legal armory to protect EU citizens’ rights, especially those covering privacy and data protection.

The European Commission, the EU’s executive arm, first proposed an AI law in 2021 that would regulate systems based on the level of risk they posed: the greater the risk to citizens’ rights or health, the greater the systems’ obligations.

Negotiations on the final legal text began in June, but a fierce debate in recent weeks over how to regulate general-purpose AI like ChatGPT and Google’s Bard chatbot threatened to derail the talks at the last minute.

Negotiators from the European Parliament and EU member states began discussions on Wednesday and the talks were expected to last into the evening.

Some member states worry that too much regulation will stifle innovation and hurt the chances of producing European AI giants to challenge those in the United States, including ChatGPT’s creator OpenAI as well as tech titans like Google and Meta.

Although there is no real deadline, senior EU figures have repeatedly said the bloc must finalize the law before the end of 2023.

Chasing local champions

EU diplomats, industry sources and other EU officials have warned the talks could end without an agreement as stumbling blocks remain over key issues.

Others have suggested that even if there is a political agreement, several meetings will still be needed to hammer out the law’s technical details.

And should EU negotiators reach agreement, the law would not come into force until 2026 at the earliest.

The main sticking point is how to regulate so-called foundation models, which are designed to perform a variety of tasks, with France, Germany and Italy calling for them to be excluded from the tougher parts of the law.

“France, Italy and Germany don’t want a regulation for these models,” said German MEP Axel Voss, who is a member of the special parliamentary committee on AI.

The parliament, however, believes it is “necessary… for transparency” to regulate such models, Voss said.

Late last month, the three biggest EU economies published a paper calling for an “innovation-friendly” approach for the law known as the AI Act.

Berlin, Paris and Rome do not want the law to include restrictive rules for foundation models, but instead say they should adhere to codes of conduct.

Many believe this change in view is motivated by their wish to avoid hindering the development of European champions—and perhaps to help companies such as France’s Mistral AI and Germany’s Aleph Alpha.

Another sticking point is remote biometric surveillance—basically, facial identification through camera data in public places.

The EU parliament wants a full ban on “real time” remote biometric identification systems, which member states oppose. The commission had initially proposed that there could be exemptions to find potential victims of crime including missing children.

There have been suggestions MEPs could concede on this point in exchange for concessions in other areas.

Brando Benifei, one of the MEPs leading negotiations for the parliament, said he saw a “willingness” by everyone to conclude talks.

But, he added, “we are not scared of walking away from a bad deal”.

France’s digital minister Jean-Noel Barrot said it was important to “have a good agreement” and suggested there should be no rush for an agreement at any cost.

“Many important points still need to be covered in a single night,” he added.

Concerns over AI’s impact and the need to supervise the technology are shared worldwide.

US President Joe Biden issued an executive order in October to regulate AI in a bid to mitigate the technology’s risks.

News

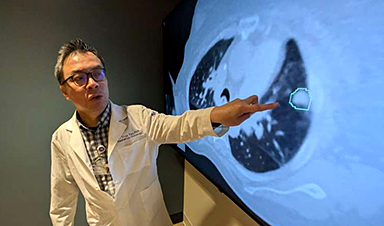

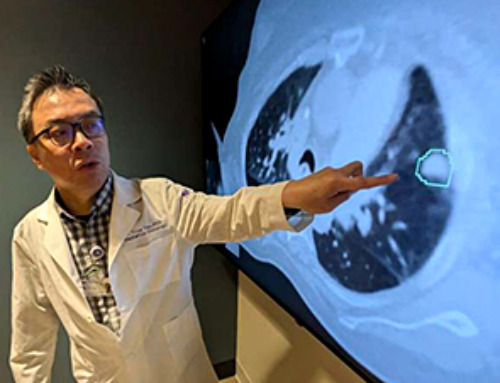

AI matches doctors in mapping lung tumors for radiation therapy

In radiation therapy, precision can save lives. Oncologists must carefully map the size and location of a tumor before delivering high-dose radiation to destroy cancer cells while sparing healthy tissue. But this process, called [...]

Scientists Finally “See” Key Protein That Controls Inflammation

Researchers used advanced microscopy to uncover important protein structures. For the first time, two important protein structures in the human body are being visualized, thanks in part to cutting-edge technology at the University of [...]

AI tool detects 9 types of dementia from a single brain scan

Mayo Clinic researchers have developed a new artificial intelligence (AI) tool that helps clinicians identify brain activity patterns linked to nine types of dementia, including Alzheimer's disease, using a single, widely available scan—a transformative [...]

Is plastic packaging putting more than just food on your plate?

New research reveals that common food packaging and utensils can shed microscopic plastics into our food, prompting urgent calls for stricter testing and updated regulations to protect public health. Beyond microplastics: The analysis intentionally [...]

Aging Spreads Through the Bloodstream

Summary: New research reveals that aging isn’t just a local cellular process—it can spread throughout the body via the bloodstream. A redox-sensitive protein called ReHMGB1, secreted by senescent cells, was found to trigger aging features [...]

AI and nanomedicine find rare biomarkers for prostrate cancer and atherosclerosis

Imagine a stadium packed with 75,000 fans, all wearing green and white jerseys—except one person in a solid green shirt. Finding that person would be tough. That's how hard it is for scientists to [...]

Are Pesticides Breeding the Next Pandemic? Experts Warn of Fungal Superbugs

Fungicides used in agriculture have been linked to an increase in resistance to antifungal drugs in both humans and animals. Fungal infections are on the rise, and two UC Davis infectious disease experts, Dr. George Thompson [...]

Scientists Crack the 500-Million-Year-Old Code That Controls Your Immune System

A collaborative team from Penn Medicine and Penn Engineering has uncovered the mathematical principles behind a 500-million-year-old protein network that determines whether foreign materials are recognized as friend or foe. How does your body [...]

Team discovers how tiny parts of cells stay organized, new insights for blocking cancer growth

A team of international researchers led by scientists at City of Hope provides the most thorough account yet of an elusive target for cancer treatment. Published in Science Advances, the study suggests a complex signaling [...]

Nanomaterials in Ophthalmology: A Review

Eye diseases are becoming more common. In 2020, over 250 million people had mild vision problems, and 295 million experienced moderate to severe ocular conditions. In response, researchers are turning to nanotechnology and nanomaterials—tools that are transforming [...]

Natural Plant Extract Removes up to 90% of Microplastics From Water

Researchers found that natural polymers derived from okra and fenugreek are highly effective at removing microplastics from water. The same sticky substances that make okra slimy and give fenugreek its gel-like texture could help [...]

Instant coffee may damage your eyes, genetic study finds

A new genetic study shows that just one extra cup of instant coffee a day could significantly increase your risk of developing dry AMD, shedding fresh light on how our daily beverage choices may [...]

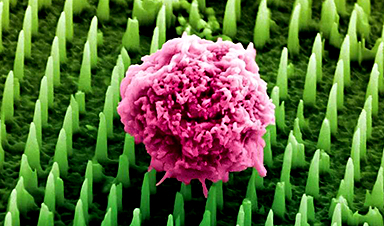

Nanoneedle patch offers painless alternative to traditional cancer biopsies

A patch containing tens of millions of microscopic nanoneedles could soon replace traditional biopsies, scientists have found. The patch offers a painless and less invasive alternative for millions of patients worldwide who undergo biopsies [...]

Small antibodies provide broad protection against SARS coronaviruses

Scientists have discovered a unique class of small antibodies that are strongly protective against a wide range of SARS coronaviruses, including SARS-CoV-1 and numerous early and recent SARS-CoV-2 variants. The unique antibodies target an [...]

Controlling This One Molecule Could Halt Alzheimer’s in Its Tracks

New research identifies the immune molecule STING as a driver of brain damage in Alzheimer’s. A new approach to Alzheimer’s disease has led to an exciting discovery that could help stop the devastating cognitive decline [...]

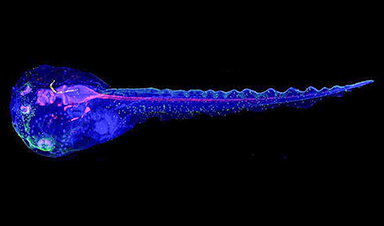

Cyborg tadpoles are helping us learn how brain development starts

How does our brain, which is capable of generating complex thoughts, actions and even self-reflection, grow out of essentially nothing? An experiment in tadpoles, in which an electronic implant was incorporated into a precursor [...]